Image by Author

Why Use Activation Functions

Deep learning models and neural networks consist of interconnected nodes, where data is passed sequentially through each hidden layer. However, a composition of linear functions is itself always a linear function. Activation functions become important when we need to learn complex, non-linear patterns in our data.

The two major benefits of using activation functions are:

Introduces Non-Linearity

Linear relationships are rare in real-world scenarios. Most real-world scenarios are complex and follow a variety of different trends. Learning such patterns is impossible with linear algorithms like Linear and Logistic Regression. Activation functions add non-linearity to the model, allowing it to learn complex patterns and variance in the data. This enables deep learning models to perform complicated tasks, including those in the image and language domains.

Allow Deep Neural Layers

As mentioned above, when we sequentially apply multiple linear functions, the output is still a linear combination of the inputs. Introducing non-linear functions between the layers allows each layer to learn different features of the input data. Without activation functions, a deeply connected neural network architecture is equivalent to a basic Linear or Logistic Regression algorithm.
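The collapse of stacked linear layers can be checked numerically. The snippet below is a minimal sketch in plain Python; the helper names (`matmul`, `linear_layer`) and the weight values are made up for illustration. It shows that applying two linear layers in sequence gives the same result as applying a single layer whose weights are the product of the two.

```python
# Two "layers" without activation functions, written as plain 2x2 matrices.
# The weight values are arbitrary and only serve as an illustration.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def linear_layer(weights, x):
    """Apply a linear layer (no bias, no activation) to an input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

W1 = [[1.0, 2.0], [0.0, -1.0]]  # first linear layer
W2 = [[3.0, 0.0], [1.0, 1.0]]   # second linear layer
x = [2.0, 5.0]

stacked = linear_layer(W2, linear_layer(W1, x))  # layer 1, then layer 2
collapsed = linear_layer(matmul(W2, W1), x)      # one equivalent linear layer

print(stacked, collapsed)  # both are [36.0, 7.0]
```

However many linear layers are stacked, the same trick applies, so the extra depth adds no expressive power until a non-linear function is placed between the layers.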

Activation functions allow deep learning architectures to learn complex patterns, making them more powerful than simple Machine Learning algorithms.

Let's look at some of the most common activation functions used in deep learning.

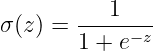

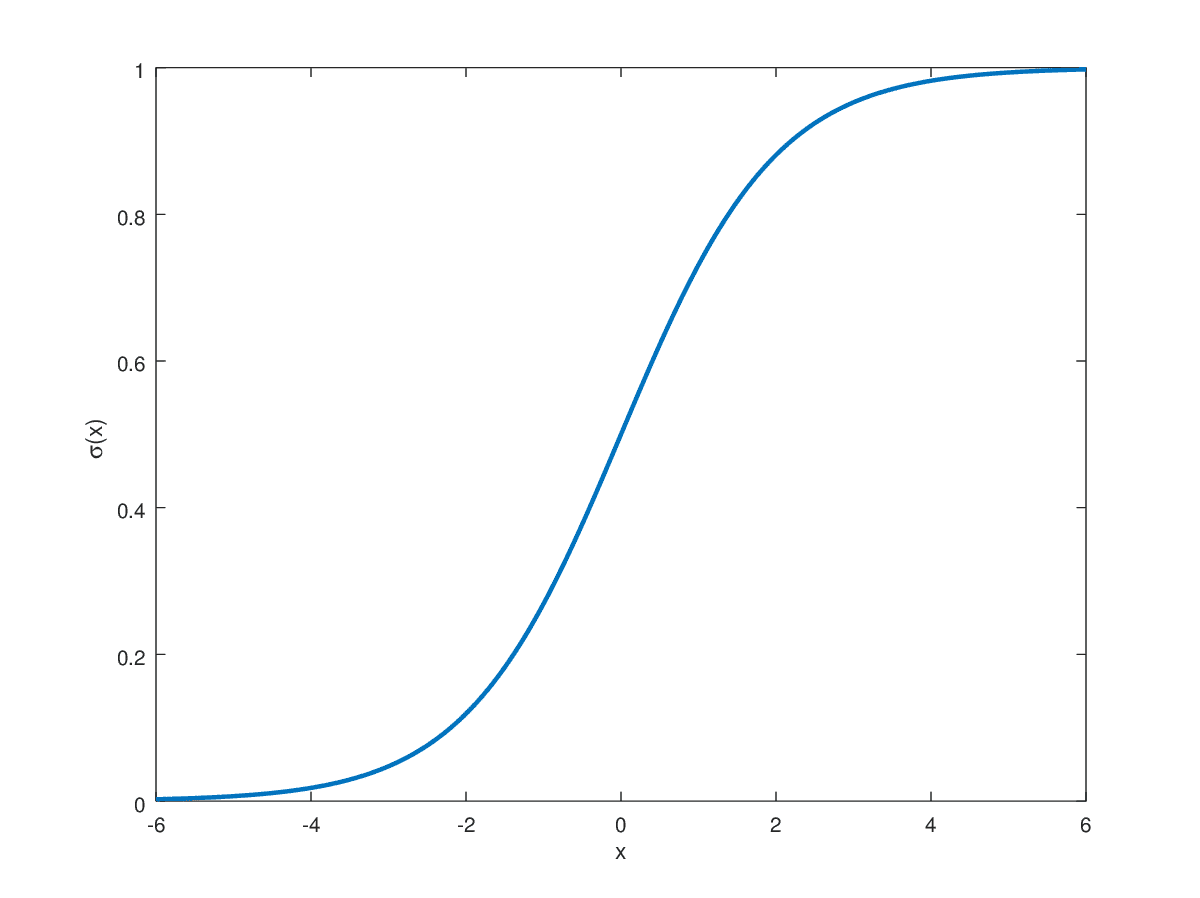

Sigmoid

Commonly used in binary classification tasks, the Sigmoid function maps real-valued numbers to values between 0 and 1.

The above equation looks as follows when plotted:

Image by Hvidberrrg

The Sigmoid function is primarily used in the output layer for binary classification tasks where the target label is either 0 or 1. This naturally makes Sigmoid preferable for such tasks, as the output is restricted to this range. Large positive values approaching infinity are mapped close to 1. On the opposite end, values approaching negative infinity are mapped close to 0. All real-valued numbers in between are mapped into the range 0 to 1 along an S-shaped curve.
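As a rough illustration of this mapping, the sketch below implements the standard logistic sigmoid, 1 / (1 + e^(-x)), using only Python's `math` module. Splitting on the sign of `x` is a common trick to avoid overflow for large negative inputs; it is an implementation choice made here, not something the article prescribes.

```python
import math

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)

# Large negative inputs approach 0, large positive inputs approach 1.
for value in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(value, sigmoid(value))
```

An input of 0 maps to exactly 0.5, which is why 0.5 is the usual decision threshold when the output is read as a class probability.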

Shortcomings

Saturation Points

The sigmoid function poses problems for the gradient descent algorithm during backpropagation. Except for values close to the center of the S-shaped curve, the gradient is extremely close to zero, which causes problems for training. Close to the asymptotes, this can lead to the vanishing gradient problem, as small gradients significantly slow down convergence.
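The saturation can be seen by evaluating the derivative directly. The sketch below uses the well-known identity that the sigmoid's derivative is s(x) * (1 - s(x)); the function names and sample inputs are chosen for illustration only.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

# The gradient peaks at 0.25 in the center and shrinks quickly on both sides.
for value in (-10.0, -5.0, 0.0, 5.0, 10.0):
    print(value, sigmoid_grad(value))

# Even in the best case, chaining ten sigmoid derivatives (ignoring the
# weights, which is a simplifying assumption) gives a very small factor.
best_case_ten_layers = 0.25 ** 10
print(best_case_ten_layers)
```

Because the derivative never exceeds 0.25, every sigmoid layer that the gradient passes through on the way back can only shrink it, which is one way to see why deep stacks of sigmoid layers train slowly.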

Not Zero-Centered

A zero-centered non-linear function keeps the mean activation value close to 0, and in practice such normalized values tend to help gradient descent converge faster towards the minima. Although not strictly necessary, zero-centered activations allow faster training. The Sigmoid function outputs 0.5 when the input is 0, and its outputs are always positive, so it is not zero-centered. This is one of the drawbacks of using Sigmoid in hidden layers.

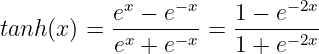

Tanh

The hyperbolic tangent function is an improvement over the Sigmoid function. Instead of the [0,1] range, the Tanh function maps real-valued numbers to values between -1 and 1.

The Tanh function looks as follows:

Image by Wolfram

The Tanh function follows the same S-shaped curve as the Sigmoid, but it is now zero-centered. This allows faster convergence during training, as it addresses one of the shortcomings of the Sigmoid function. This makes it more suitable for use in hidden layers of a neural network architecture.
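The relationship between the two functions can be made concrete with a small sketch. Tanh is a rescaled and shifted sigmoid, tanh(x) = 2 * sigmoid(2x) - 1, which is a standard identity. The helper name `tanh_via_sigmoid` and the sample inputs below are illustrative choices.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh_via_sigmoid(x):
    """Tanh expressed as a rescaled, shifted sigmoid."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

# Inputs placed symmetrically around zero.
inputs = [-2.0, -1.0, 0.0, 1.0, 2.0]

# Tanh outputs average to roughly 0, sigmoid outputs to roughly 0.5.
tanh_mean = sum(math.tanh(v) for v in inputs) / len(inputs)
sigmoid_mean = sum(sigmoid(v) for v in inputs) / len(inputs)

print(tanh_mean, sigmoid_mean)
print(math.tanh(1.0), tanh_via_sigmoid(1.0))
```

For inputs that are symmetric around zero, the Tanh activations balance out while the Sigmoid activations are shifted towards 0.5, which is what "zero-centered" refers to here.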

Shortcomings

Saturation Points

Because the Tanh function follows the same S-shaped curve as the Sigmoid, it saturates in the same way. Away from the center of the curve the gradient is close to zero, so Tanh can also suffer from vanishing gradients near its asymptotes.

Computational Expense

Although not a major concern on modern hardware, the exponential calculation is more expensive than other common alternatives.

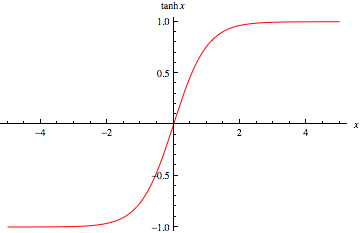

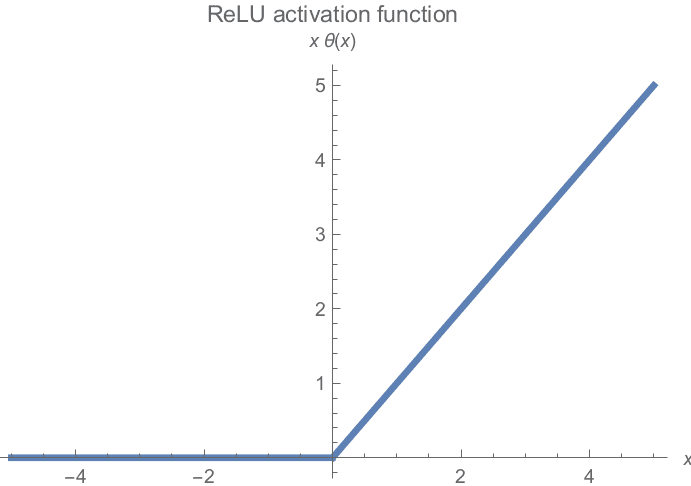

ReLU

The most commonly used activation function in practice, the Rectified Linear Unit (ReLU) is one of the simplest yet most effective non-linear functions.

It preserves all non-negative values and clamps all negative values to 0. Visualized, the ReLU function looks as follows:

Image by Michiel Straat
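The clamping behaviour can be written in one line. The following is a minimal sketch in plain Python that operates on a single number; real frameworks apply the same rule element-wise to whole tensors.

```python
def relu(x):
    """Rectified Linear Unit: keep non-negative values, clamp negatives to 0."""
    return max(0.0, x)

# Negative inputs become 0, non-negative inputs pass through unchanged.
outputs = [relu(v) for v in (-3.0, -0.5, 0.0, 0.5, 3.0)]
print(outputs)  # [0.0, 0.0, 0.0, 0.5, 3.0]
```

No exponential is involved, only a comparison, which is part of why ReLU is cheap to compute compared with Sigmoid and Tanh.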

Shortcomings

Dying ReLU

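The gradient flattens at one end of the graph. All negative values have zero gradient, so a neuron whose input is negative receives no update for that example. A neuron whose input is negative for every example stops learning entirely, which is known as the dying ReLU problem.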
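A small sketch makes the effect visible. The neuron below is hypothetical: its weight, bias, inputs, and the upstream gradient of 1.0 are all assumed values, chosen so that the pre-activation is negative for every input. The gradient at exactly 0 is set to 0 here by convention.

```python
def relu_grad(z):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

# Hypothetical neuron with a strongly negative bias.
weight, bias = 0.5, -10.0
inputs = [0.0, 1.0, 2.0, 3.0]
upstream = 1.0  # assumed gradient arriving from the loss

# Chain rule: d(loss)/d(weight) = upstream * relu'(w * x + b) * x
weight_grads = [upstream * relu_grad(weight * x + bias) * x for x in inputs]
print(weight_grads)  # every entry is 0.0, so the weight never changes
```

Since every gradient is zero, gradient descent leaves the weight and bias untouched, and the neuron stays inactive for the rest of training unless something else changes its inputs.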

Unbounded Activation

On the right-hand side of the graph, there is no upper limit on the activation value. Very large activations can lead to an exploding gradient problem if the resulting gradient values grow too high. This issue is usually mitigated with Gradient Clipping and Weight Initialization techniques.

Not Zero-Centered

Similar to Sigmoid, the ReLU activation function is also not zero-centered. Likewise, this can cause problems with convergence and slow down training.

Despite these shortcomings, it is the default choice for hidden layers in neural network architectures and has proven highly effective in practice.

Key Takeaways

Now that we know about the three most common activation functions, how do we know which is the best choice for our scenario?

Although it depends heavily on the data distribution and the specific problem statement, there are still some basic starting points that are widely used in practice.

- Sigmoid is only suitable for output activations of binary problems where target labels are either 0 or 1.

- Tanh has now largely been replaced by ReLU and similar functions. However, it is still used in hidden layers for RNNs.

- In all other scenarios, ReLU is the default choice for hidden layers in deep learning architectures.
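These starting points can be combined in a tiny forward pass. The sketch below is illustrative only: the `forward` helper, the layer sizes, and every weight and bias value are assumptions made up for the example. It uses ReLU in the hidden layer and Sigmoid at the output of a binary classifier.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, hidden_w, hidden_b, out_w, out_b):
    """One hidden layer with ReLU, followed by a single Sigmoid output."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(hidden_w, hidden_b)]
    logit = sum(w * h for w, h in zip(out_w, hidden)) + out_b
    return sigmoid(logit)

# Hypothetical, untrained parameters for a 2-input, 2-hidden-unit network.
hidden_w = [[0.5, -0.2], [-0.3, 0.8]]
hidden_b = [0.1, -0.1]
out_w = [1.0, -1.5]
out_b = 0.2

prob = forward([1.0, 2.0], hidden_w, hidden_b, out_w, out_b)
print(prob)  # a value between 0 and 1, read as the probability of class 1
```

The hidden layer supplies the non-linearity, while the Sigmoid at the end only squashes the final score into the 0 to 1 range expected for a binary label.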

Muhammad Arham is a Deep Learning Engineer working in Computer Vision and Natural Language Processing. He has worked on the deployment and optimization of several generative AI applications that reached the global top charts at Vyro.AI. He is interested in building and optimizing machine learning models for intelligent systems and believes in continual improvement.