How often do machine learning projects reach successful deployment? Not often enough. There’s plenty of industry research showing that ML projects commonly fail to deliver returns, but precious few studies have gauged the ratio of failure to success from the perspective of data scientists – the folks who develop the very models these projects are meant to deploy.

Following up on a data scientist survey that I conducted with KDnuggets last year, this year’s industry-leading Data Science Survey run by ML consultancy Rexer Analytics addressed the question – in part because Karl Rexer, the company’s founder and president, allowed yours truly to participate, driving the inclusion of questions about deployment success (part of my work during a one-year analytics professorship I held at UVA Darden).

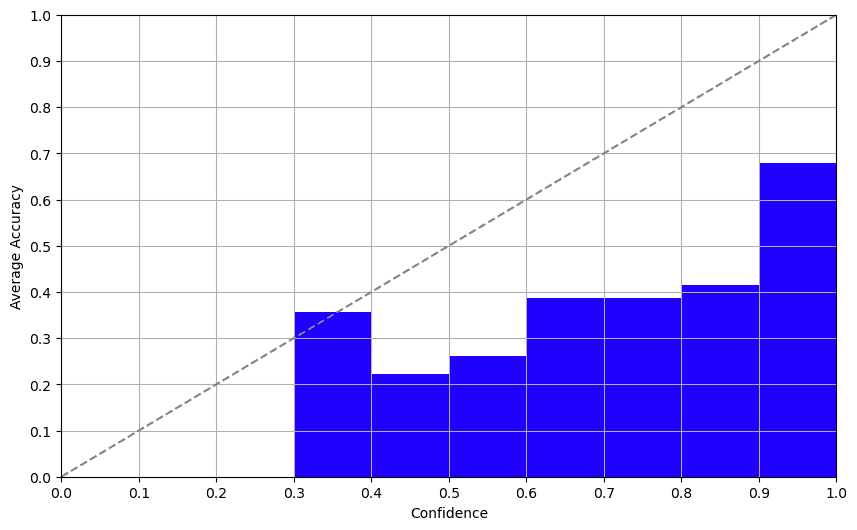

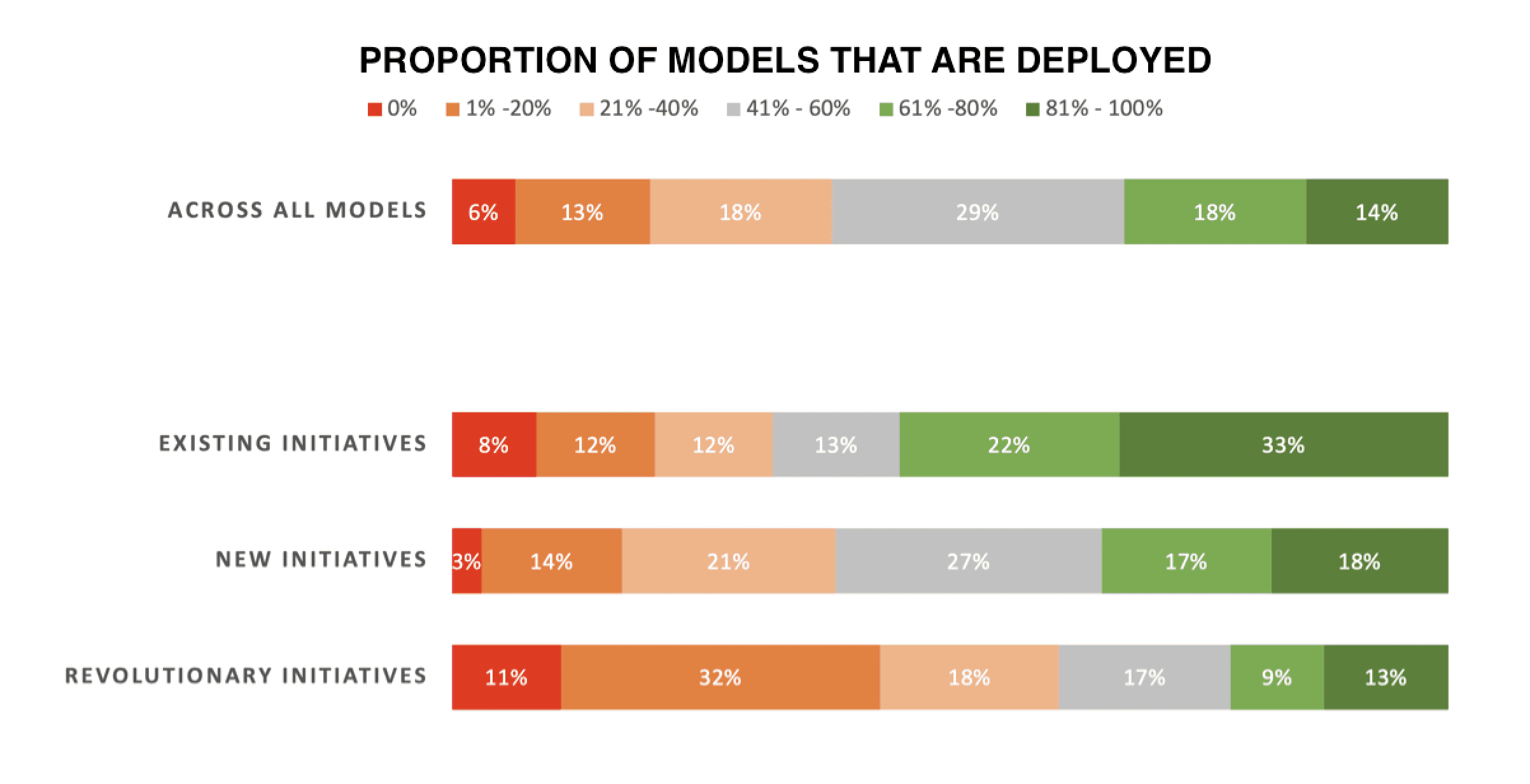

The news isn’t great. Only 22% of data scientists say their “revolutionary” initiatives – models developed to enable a new process or capability – usually deploy. 43% say that 80% or more fail to deploy.

Across all kinds of ML projects – including refreshing models for existing deployments – only 32% say that their models usually deploy.

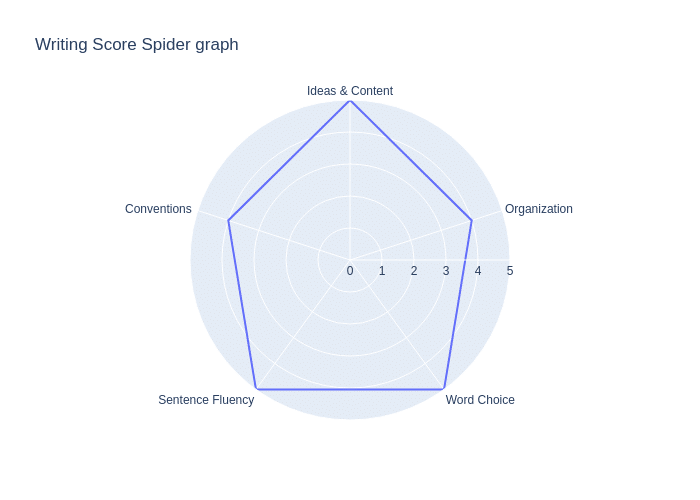

Here are the detailed results of that part of the survey, as presented by Rexer Analytics, breaking down deployment rates across three kinds of ML initiatives:

Key:

- Existing initiatives: Models developed to update/refresh an existing model that’s already been successfully deployed

- New initiatives: Models developed to enhance an existing process for which no model was already deployed

- Revolutionary initiatives: Models developed to enable a new process or capability

The Problem: Stakeholders Lack Visibility and Deployment Isn’t Fully Planned For

In my view, this struggle to deploy stems from two main contributing factors: endemic under-planning and business stakeholders lacking concrete visibility. Many data professionals and business leaders haven’t come to recognize that ML’s intended operationalization must be planned in great detail and pursued aggressively from the inception of every ML project.

In fact, I’ve written a new book about just that: The AI Playbook: Mastering the Rare Art of Machine Learning Deployment. In this book, I introduce a deployment-focused, six-step practice for ushering machine learning projects from conception to deployment that I call bizML.

An ML project’s key stakeholder – the person in charge of the operational effectiveness targeted for improvement, such as a line-of-business manager – needs visibility into precisely how ML will improve their operations and how much value the improvement is expected to deliver. They need this first to weigh in on the project’s execution throughout the pre-deployment stages, and ultimately to greenlight a model’s deployment.

But ML’s performance often isn’t measured! When the Rexer survey asked, “How often does your company / organization measure the performance of analytic projects?” only 48% of data scientists said “Always” or “Most of the time.” That’s pretty wild. It ought to be more like 99% or 100%.

And when performance is measured, it’s in terms of technical metrics that are arcane and mostly irrelevant to business stakeholders. Data scientists know better, but generally don’t abide – in part because ML tools generally only serve up technical metrics. According to the survey, data scientists rank business KPIs like ROI and revenue as the most important metrics, yet they list technical metrics like lift and AUC as the ones most commonly measured.

Technical performance metrics are “fundamentally useless to and disconnected from business stakeholders,” according to Harvard Data Science Review. Here’s why: They only tell you the relative performance of a model, such as how it compares to guessing or another baseline. Business metrics tell you the absolute business value the model is expected to deliver – or, when evaluating after deployment, that it has proven to deliver. Such metrics are essential for deployment-focused ML projects.
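To make the relative-versus-absolute distinction concrete, here is a minimal Python sketch. Everything in it is a hypothetical illustration rather than survey data or part of any published method: the `Campaign` class, the `lift` and `expected_profit` functions, and all of the campaign figures are assumptions chosen only to show how the same model can be described by a relative metric (lift) and by an absolute one (expected profit).

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    """Hypothetical campaign economics; every figure is an illustrative assumption."""
    population: int            # customers eligible to be contacted
    base_rate: float           # response rate when contacting at random
    cost_per_contact: float    # cost of contacting one customer
    value_per_response: float  # revenue from one positive response


def lift(targeted_rate: float, base_rate: float) -> float:
    """Relative metric: how many times better the model is than random targeting."""
    return targeted_rate / base_rate


def expected_profit(campaign: Campaign, contacted_fraction: float,
                    response_rate: float) -> float:
    """Absolute metric: expected revenue minus contact cost for one campaign."""
    contacts = campaign.population * contacted_fraction
    responses = contacts * response_rate
    revenue = responses * campaign.value_per_response
    cost = contacts * campaign.cost_per_contact
    return revenue - cost


if __name__ == "__main__":
    # Assumed numbers: 100,000 customers, 2% baseline response rate,
    # $1 per contact, $40 per response.
    campaign = Campaign(population=100_000, base_rate=0.02,
                        cost_per_contact=1.0, value_per_response=40.0)

    # Assume the model's top-scored 20% of customers respond at a 6% rate.
    model_rate = 0.06
    print(f"Lift over random: {lift(model_rate, campaign.base_rate):.1f}")
    print(f"Profit, model-targeted 20%: "
          f"{expected_profit(campaign, 0.2, model_rate):,.0f}")
    print(f"Profit, contacting everyone: "
          f"{expected_profit(campaign, 1.0, campaign.base_rate):,.0f}")
```

Under these made-up numbers, a lift of 3 says only that the model beats random targeting threefold. The profit figures say what a stakeholder actually needs to hear: about $28,000 gained by contacting the model's top 20%, versus about $20,000 lost by contacting everyone.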

The Semi-Technical Understanding Business Stakeholders Need

Beyond access to business metrics, business stakeholders also need to ramp up. When the Rexer survey asked, “Are the managers and decision-makers at your organization who must approve model deployment generally knowledgeable enough to make such decisions in a well-informed manner?” only 49% of respondents answered “Most of the time” or “Always.”

Here’s what I believe is happening. The data scientist’s “client,” the business stakeholder, often gets cold feet when it comes down to authorizing deployment, since it would mean making a significant operational change to the company’s bread and butter, its largest-scale processes. They don’t have the contextual framework. For example, they wonder, “How am I to understand how much this model, which performs far shy of crystal-ball perfection, will actually help?” Thus the project dies. Then, creatively putting some kind of a positive spin on the “insights gained” serves to neatly sweep the failure under the rug. AI hype remains intact even while the potential value, the purpose of the project, is lost.

On this topic – ramping up stakeholders – I’ll plug my new book, The AI Playbook, just one more time. While covering the bizML practice, the book also upskills business professionals by delivering a vital yet friendly dose of semi-technical background knowledge that all stakeholders need in order to lead or participate in machine learning projects, end to end. This puts business and data professionals on the same page so that they can collaborate deeply, jointly establishing precisely what machine learning is called upon to predict, how well it predicts, and how its predictions are acted upon to improve operations. These essentials make or break each initiative – getting them right paves the way for machine learning’s value-driven deployment.

It’s safe to say that it’s rocky out there, especially for new, first-try ML initiatives. As the sheer force of AI hype loses its ability to continually make up for less realized value than promised, there’ll be more and more pressure to prove ML’s operational value. So I say, get out ahead of this now – start instilling a more effective culture of cross-enterprise collaboration and deployment-oriented project leadership!

For more detailed results from the 2023 Rexer Analytics Data Science Survey, click here. This is the largest survey of data science and analytics professionals in the industry. It consists of approximately 35 multiple-choice and open-ended questions that cover much more than only deployment success rates – seven general areas of data mining science and practice: (1) Field and goals, (2) Algorithms, (3) Models, (4) Tools (software packages used), (5) Technology, (6) Challenges, and (7) Future. It is conducted as a service (without corporate sponsorship) to the data science community, and the results are usually announced at the Machine Learning Week conference and shared via freely available summary reports.

This article is a product of the author’s work while he held a one-year position as the Bodily Bicentennial Professor in Analytics at the UVA Darden School of Business, which ultimately culminated with the publication of The AI Playbook: Mastering the Rare Art of Machine Learning Deployment.

Eric Siegel, Ph.D. is a leading consultant and former Columbia University professor who helps companies deploy machine learning. He is the founder of the long-running Machine Learning Week conference series, the instructor of the acclaimed online course ‘Machine Learning Leadership and Practice – End-to-End Mastery,’ executive editor of The Machine Learning Times, and a frequent keynote speaker. He wrote the bestselling Predictive Analytics: The Power to Predict Who Will Click, Buy, Lie, or Die, which has been used in courses at hundreds of universities, as well as The AI Playbook: Mastering the Rare Art of Machine Learning Deployment. Eric’s interdisciplinary work bridges the stubborn technology/business gap. At Columbia, he won the Distinguished Faculty award when teaching the graduate computer science courses in ML and AI. Later, he served as a business school professor at UVA Darden. Eric also publishes op-eds on analytics and social justice.