Image by Author

What is Rust Burn?

Rust Burn is a new deep learning framework written entirely in the Rust programming language. The motivation for creating a new framework, rather than using existing ones like PyTorch or TensorFlow, is to build a versatile framework that serves a range of users, including researchers, machine learning engineers, and low-level software engineers.

The key design principles behind Rust Burn are flexibility, performance, and ease of use.

Flexibility comes from the ability to swiftly implement cutting-edge research ideas and run experiments.

Performance is achieved through optimizations such as leveraging hardware-specific features like Tensor Cores on Nvidia GPUs.

Ease of use stems from simplifying the workflow of training, deploying, and running models in production.

Key Features:

- Flexible and dynamic computational graph

- Thread-safe data structures

- Intuitive abstractions for a simplified development process

- Blazingly fast performance during training and inference

- Supports multiple backend implementations for both CPU and GPU

- Full support for logging, metrics, and checkpointing during training

- Small but active developer community
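The multiple-backend idea can be illustrated without Burn itself. The following is a minimal sketch using only the standard library, not Burn's actual API: the `Backend` trait, the `NaiveCpu` and `ChunkedCpu` types, and the `add_on` function are all hypothetical names invented for this illustration. It shows how code written once against a trait can run on whichever backend is selected at compile time.

```rust
// Hypothetical, simplified backend abstraction (NOT Burn's real trait).
trait Backend {
    /// Human-readable backend name.
    fn name() -> &'static str;
    /// Element-wise addition of two equally sized buffers.
    fn add(lhs: &[f32], rhs: &[f32]) -> Vec<f32>;
}

struct NaiveCpu;
struct ChunkedCpu;

impl Backend for NaiveCpu {
    fn name() -> &'static str {
        "naive-cpu"
    }

    fn add(lhs: &[f32], rhs: &[f32]) -> Vec<f32> {
        assert_eq!(lhs.len(), rhs.len(), "shape mismatch");
        lhs.iter().zip(rhs).map(|(a, b)| a + b).collect()
    }
}

impl Backend for ChunkedCpu {
    fn name() -> &'static str {
        "chunked-cpu"
    }

    fn add(lhs: &[f32], rhs: &[f32]) -> Vec<f32> {
        assert_eq!(lhs.len(), rhs.len(), "shape mismatch");
        let mut out = Vec::with_capacity(lhs.len());
        // Process fixed-size chunks, as a stand-in for a vectorized or GPU kernel.
        for (l, r) in lhs.chunks(4).zip(rhs.chunks(4)) {
            out.extend(l.iter().zip(r).map(|(a, b)| a + b));
        }
        out
    }
}

// "Model" code is written once, generic over the backend.
fn add_on<B: Backend>(lhs: &[f32], rhs: &[f32]) -> Vec<f32> {
    B::add(lhs, rhs)
}

fn main() {
    let a: [f32; 4] = [2.0, 3.0, 4.0, 5.0];
    let b: [f32; 4] = [1.0; 4];
    println!("{}: {:?}", NaiveCpu::name(), add_on::<NaiveCpu>(&a, &b));
    println!("{}: {:?}", ChunkedCpu::name(), add_on::<ChunkedCpu>(&a, &b));
}
```

Swapping the type parameter is the only change needed to move the same computation to another backend. The Burn examples later in this article follow the same pattern with a backend type alias and a `B: Backend` bound.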

Getting Started

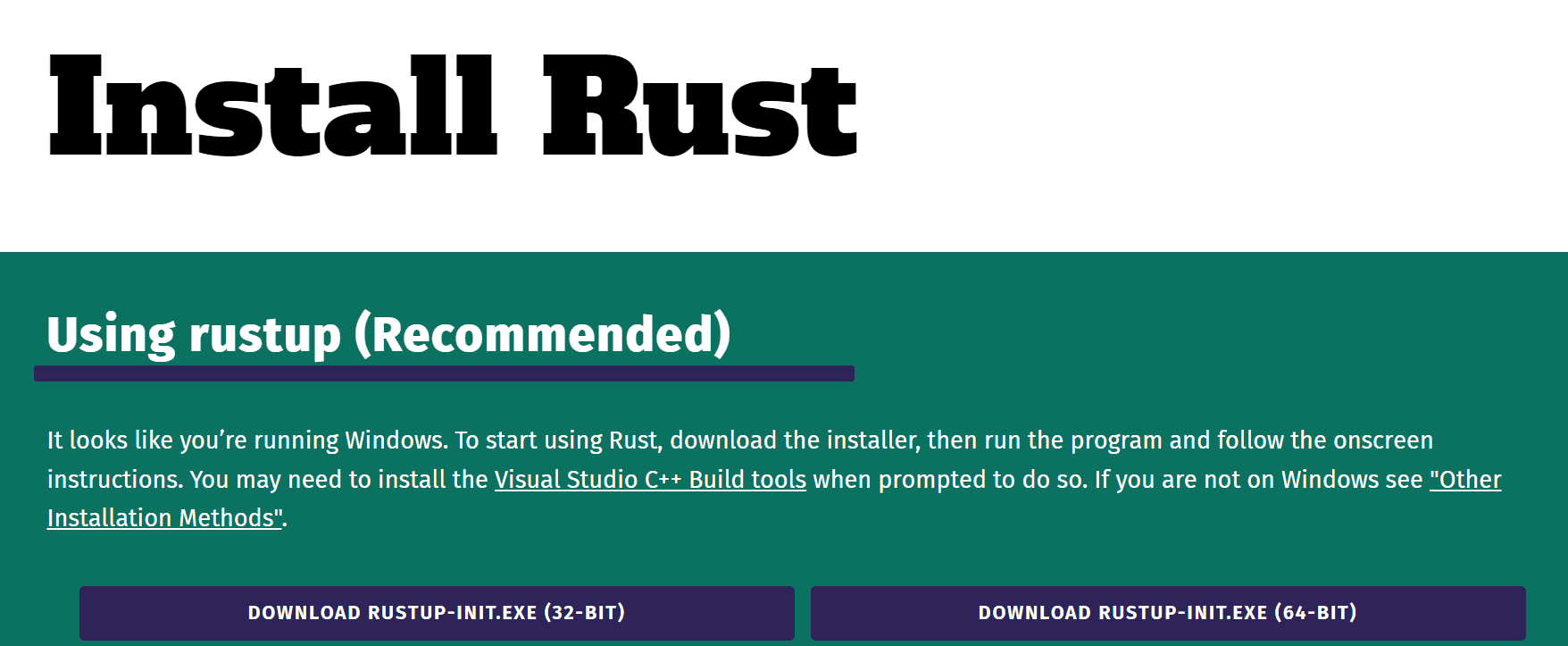

Installing Rust

Burn is a powerful deep learning framework based on the Rust programming language. It requires a basic understanding of Rust, but once you have that down, you can take advantage of all the features Burn has to offer.

To install Rust, follow the official guide. You can also check out the GeeksforGeeks guide for installing Rust on Windows and Linux, which includes screenshots.

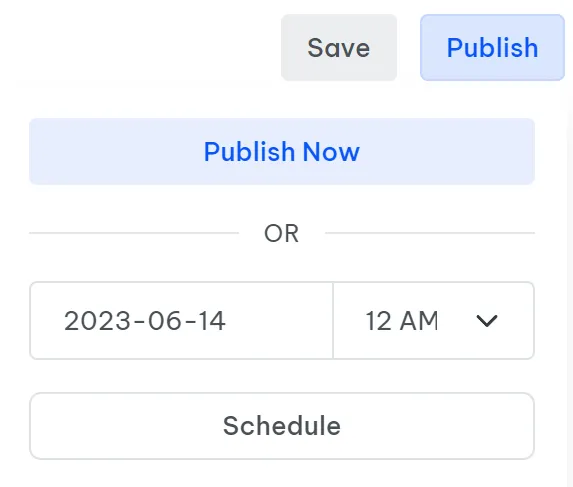

Image from Install Rust

Installing Burn

To use Rust Burn, you first need to have Rust installed on your system. Once Rust is correctly set up, you can create a new Rust application using cargo, Rust’s package manager.

Run the following command in your current directory:

cargo new new_burn_app

Navigate into this new directory:

cd new_burn_app

Next, add Burn as a dependency, along with the WGPU backend feature, which enables GPU operations:

cargo add burn --features wgpu

Finally, compile the project to install Burn:

cargo build

This will install the Burn framework along with the WGPU backend. WGPU allows Burn to execute low-level GPU operations.

Example Code

Element-Wise Addition

To run the following code, open src/main.rs and replace its content:

    use burn::tensor::Tensor;
    use burn::backend::WgpuBackend;

    // Type alias for the backend to use.
    type Backend = WgpuBackend;

    fn main() {
        // Create two tensors: the first with explicit values, the second
        // filled with ones and with the same shape as the first.
        let tensor_1 = Tensor::<Backend, 2>::from_data([[2., 3.], [4., 5.]]);
        let tensor_2 = Tensor::<Backend, 2>::ones_like(&tensor_1);

        // Print the element-wise addition (done with the WGPU backend) of the two tensors.
        println!("{}", tensor_1 + tensor_2);
    }

In the main function, we created two tensors with the WGPU backend and added them together.

To execute the code, run cargo run in the terminal.

Output:

You should now be able to view the result of the addition.

    Tensor {
      data: [[3.0, 4.0], [5.0, 6.0]],
      shape: [2, 2],
      device: BestAvailable,
      backend: "wgpu",
      kind: "Float",
      dtype: "f32",
    }

Note: the code above is an example from the Burn Book: Getting Started.
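To make the arithmetic behind the Burn example explicit, here is a minimal sketch of the same computation using only the standard library. The `Matrix2` alias and the `ones_like` and `add` helpers are hypothetical stand-ins written for this illustration; they are not Burn functions and run on the CPU only.

```rust
// A 2x2 matrix stored as nested arrays; a stand-in for a rank-2 tensor.
type Matrix2 = [[f32; 2]; 2];

/// Returns a matrix of ones with the same shape as `m` (mirrors the idea of `ones_like`).
fn ones_like(_m: &Matrix2) -> Matrix2 {
    [[1.0; 2]; 2]
}

/// Adds two matrices element by element.
fn add(lhs: &Matrix2, rhs: &Matrix2) -> Matrix2 {
    let mut out = [[0.0; 2]; 2];
    for i in 0..2 {
        for j in 0..2 {
            out[i][j] = lhs[i][j] + rhs[i][j];
        }
    }
    out
}

fn main() {
    let tensor_1: Matrix2 = [[2.0, 3.0], [4.0, 5.0]];
    let tensor_2 = ones_like(&tensor_1);
    println!("{:?}", add(&tensor_1, &tensor_2)); // prints [[3.0, 4.0], [5.0, 6.0]]
}
```

Each output element is the sum of the two inputs at the same position, which matches the data field in the Burn output above.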

Position-Wise Feed-Forward Module

Here is an example of how easy it is to use the framework. The following snippet declares a position-wise feed-forward module and its forward pass.

    use burn::nn;
    use burn::module::Module;
    use burn::tensor::backend::Backend;
    use burn::tensor::Tensor;

    #[derive(Module, Debug)]
    pub struct PositionWiseFeedForward<B: Backend> {
        linear_inner: nn::Linear<B>,
        linear_outer: nn::Linear<B>,
        dropout: nn::Dropout,
        gelu: nn::GELU,
    }

    impl<B: Backend> PositionWiseFeedForward<B> {
        // Generic over the tensor rank D, so the same module works for any input shape.
        pub fn forward<const D: usize>(&self, input: Tensor<B, D>) -> Tensor<B, D> {
            let x = self.linear_inner.forward(input);
            let x = self.gelu.forward(x);
            let x = self.dropout.forward(x);

            self.linear_outer.forward(x)
        }
    }

The above code is from the GitHub repository.
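For readers who want to see what this forward pass computes, the following is a rough standard-library sketch of the same layer order: linear, GELU, then linear. It is an illustration under stated assumptions, not Burn's implementation: this `Linear` struct, its `identity` constructor, and the `gelu` function are hypothetical helpers, GELU uses the common tanh approximation, and dropout is left out because it acts as the identity at inference time.

```rust
/// Dense layer computing `y = W x + b` for one position (one feature vector).
struct Linear {
    weight: Vec<Vec<f32>>, // shape: [out_features][in_features]
    bias: Vec<f32>,        // shape: [out_features]
}

impl Linear {
    /// Identity weights and zero bias; handy for a quick check.
    fn identity(n: usize) -> Self {
        let weight = (0..n)
            .map(|i| (0..n).map(|j| if i == j { 1.0 } else { 0.0 }).collect::<Vec<f32>>())
            .collect();
        Linear { weight, bias: vec![0.0; n] }
    }

    fn forward(&self, x: &[f32]) -> Vec<f32> {
        self.weight
            .iter()
            .zip(&self.bias)
            .map(|(row, b)| row.iter().zip(x).map(|(w, v)| w * v).sum::<f32>() + b)
            .collect()
    }
}

/// GELU activation, using the common tanh approximation.
fn gelu(x: f32) -> f32 {
    let c = (2.0 / std::f32::consts::PI).sqrt();
    0.5 * x * (1.0 + (c * (x + 0.044715 * x.powi(3))).tanh())
}

struct PositionWiseFeedForward {
    linear_inner: Linear,
    linear_outer: Linear,
}

impl PositionWiseFeedForward {
    /// Applies the same two-layer network to every position independently.
    /// Dropout is omitted: at inference time it is the identity.
    fn forward(&self, input: &[Vec<f32>]) -> Vec<Vec<f32>> {
        input
            .iter()
            .map(|position| {
                let x = self.linear_inner.forward(position);
                let x: Vec<f32> = x.into_iter().map(gelu).collect();
                self.linear_outer.forward(&x)
            })
            .collect()
    }
}

fn main() {
    let ffn = PositionWiseFeedForward {
        linear_inner: Linear::identity(2),
        linear_outer: Linear::identity(2),
    };
    // Two positions, two features each.
    let output = ffn.forward(&[vec![0.0, 0.0], vec![1.0, -1.0]]);
    println!("{:?}", output);
}
```

"Position-wise" means every position in the sequence passes through the same weights independently, which is why the sketch simply maps over the positions.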

Example Projects

To explore more examples and run them, clone the https://github.com/burn-rs/burn repository and run the projects below:

- MNIST: Train a model on either CPU or GPU using various backends.

- MNIST Inference Web: Model inference in the browser.

- Text Classification: Train a transformer encoder from scratch on GPU.

- Text Generation: Build and train an autoregressive transformer from scratch on GPU.

Pre-trained Models

To build your AI application, you can use the following pre-trained models and fine-tune them with your dataset.

- SqueezeNet: squeezenet-burn

- Llama 2: Gadersd/llama2-burn

- Whisper: Gadersd/whisper-burn

- Stable Diffusion v1.4: Gadersd/stable-diffusion-burn

Conclusion

Rust Burn represents an exciting new option in the deep learning framework landscape. If you are already a Rust developer, you can leverage Rust’s speed, safety, and concurrency to push the boundaries of what is possible in deep learning research and production. Burn sets out to find the right compromises between flexibility, performance, and usability to create a uniquely versatile framework suitable for diverse use cases.

While still in its early stages, Burn shows promise in tackling pain points of existing frameworks and serving the needs of various practitioners in the field. As the framework matures and the community around it grows, it has the potential to become a production-ready framework on par with established options. Its fresh design and language choice offer new possibilities for the deep learning community.

Resources

- Documentation: https://burn-rs.github.io/book/overview.html

- Website: https://burn-rs.github.io/

- GitHub: https://github.com/burn-rs/burn

- Demo: https://burn-rs.github.io/demo

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.