Illustration by Author

Building a machine learning model that generalizes well to new data is very challenging. The model needs to be evaluated to understand whether it is good enough or needs some modifications to improve its performance.

If the model doesn't learn enough of the patterns from the training set, it will perform badly on both the training and test sets. This is the so-called underfitting problem.

Learning too much about the patterns of the training data, even the noise, will lead the model to perform very well on the training set but poorly on the test set. This situation is called overfitting. The model generalizes well when the performance measured on the training set and on the test set is similar.

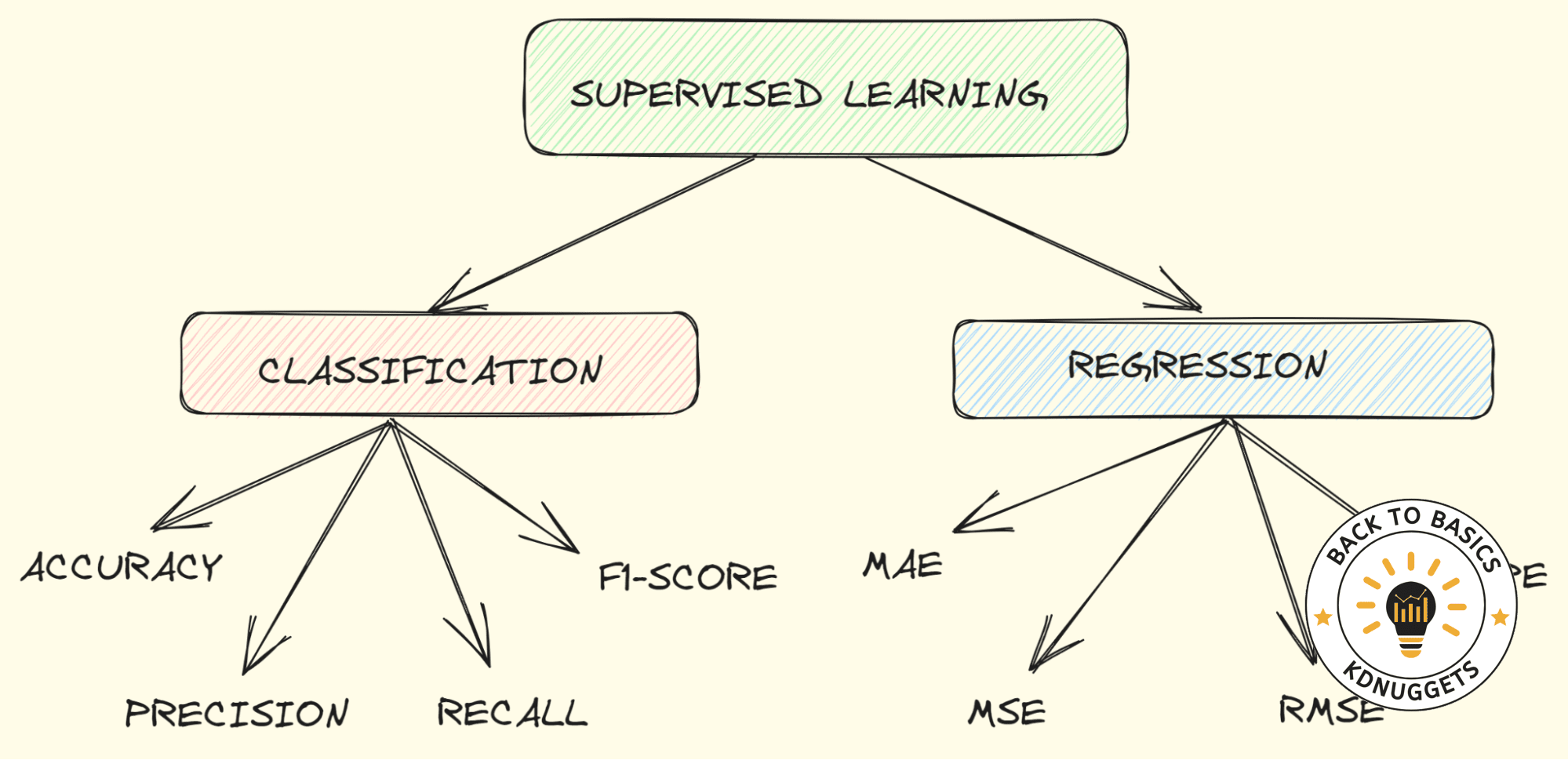

In this article, we are going to see the most important evaluation metrics for classification and regression problems. They help to verify whether the model is capturing the patterns in the training sample and performing well on unseen data. Let's get started!

Classification

When our target is categorical, we are dealing with a classification problem. The choice of the most appropriate metrics depends on several aspects, such as the characteristics of the dataset, whether it is imbalanced or not, and the goals of the analysis.

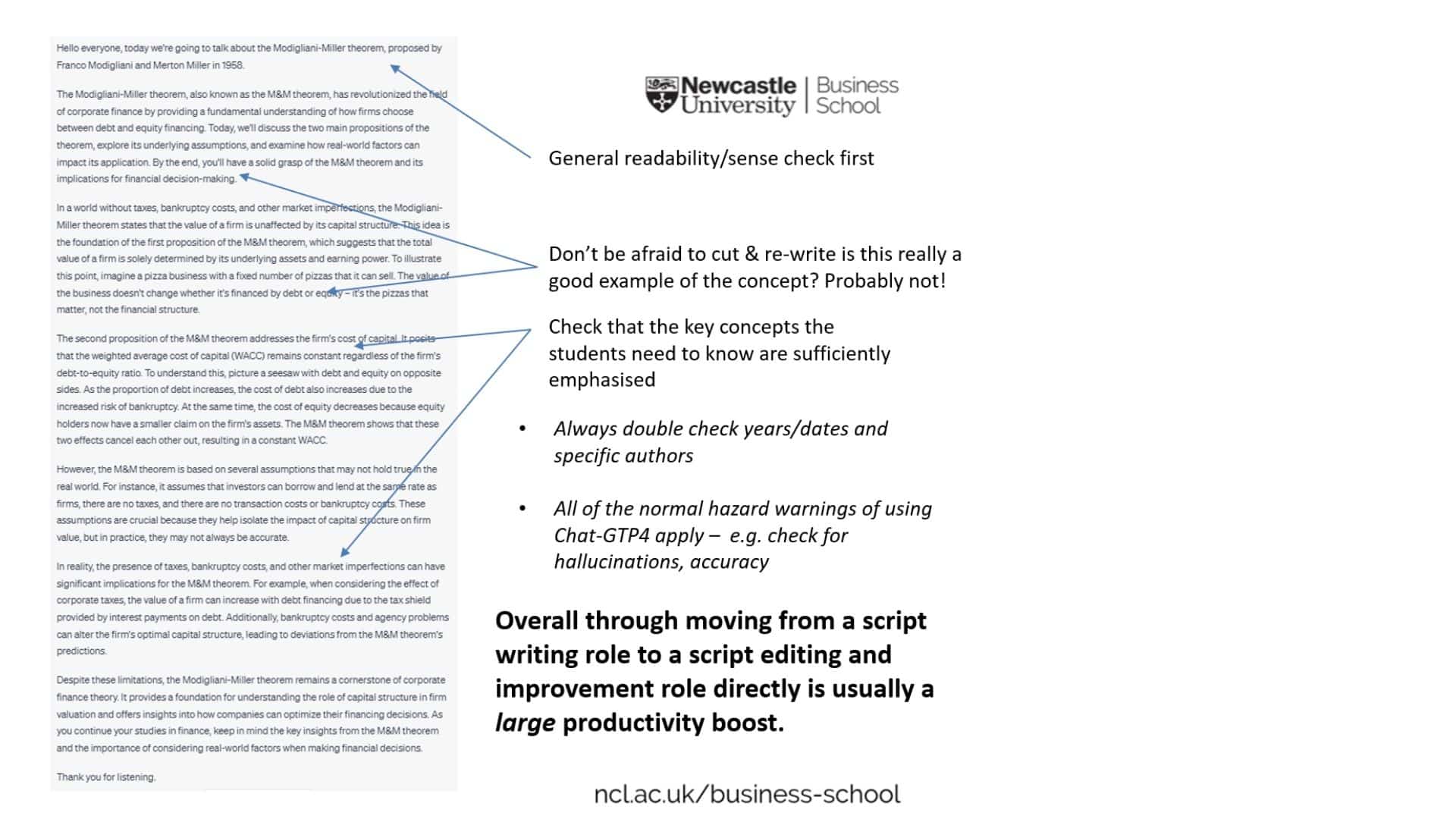

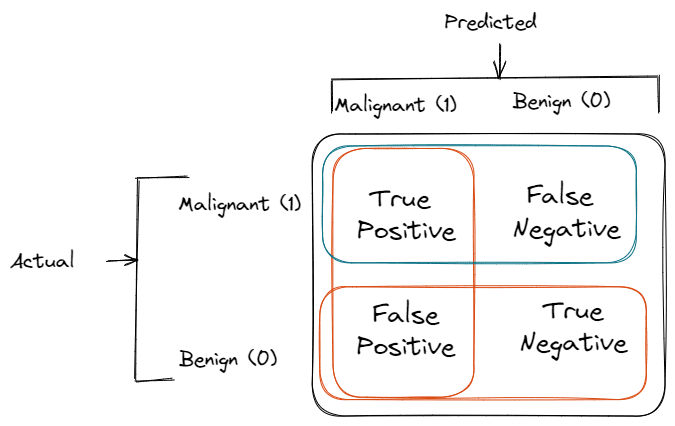

Before showing the evaluation metrics, there is an important table that needs to be explained: the Confusion Matrix, which summarizes the performance of a classification model.

Let's say that we want to train a model to detect breast cancer from an ultrasound image. We have only two classes, malignant and benign.

- True Positives: the number of people with a malignant tumor who are predicted to have a malignant tumor

- True Negatives: the number of people with a benign tumor who are predicted to have a benign tumor

- False Positives: the number of people with a benign tumor who are predicted to have a malignant tumor

- False Negatives: the number of people with a malignant tumor who are predicted to have a benign tumor

Example of Confusion Matrix. Illustration by Author.
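The four cells of the confusion matrix can be counted directly from the true and predicted labels. The following is a minimal sketch in plain Python; the function name `confusion_counts` and the label encoding (1 = malignant, 0 = benign) are assumptions made for illustration, and the labels are made up.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary classification problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # malignant, predicted malignant
            else:
                fp += 1  # benign, predicted malignant
        else:
            if actual == positive:
                fn += 1  # malignant, predicted benign
            else:
                tn += 1  # benign, predicted benign
    return tp, tn, fp, fn


# Hypothetical labels: 1 = malignant, 0 = benign
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)  # 2 4 1 1
```

In practice, a library routine such as scikit-learn's `confusion_matrix` would usually be preferred; the loop above only makes the definitions explicit.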

Accuracy

Accuracy is one of the best-known and most popular metrics for evaluating a classification model. It is the number of correct predictions divided by the total number of samples.

Accuracy is appropriate when we know that the dataset is balanced, that is, when each class of the output variable has roughly the same number of observations.

Using Accuracy, we can answer the question "Is the model correctly predicting all the classes?". For this reason, it counts the correct predictions of both the positive class (malignant cancer) and the negative class (benign cancer).

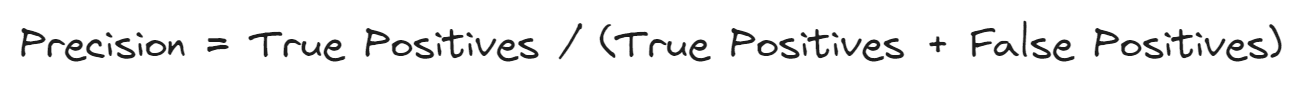
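As a rough illustration of why Accuracy is best reserved for balanced data, the sketch below computes it from confusion-matrix counts. The function name `accuracy` and all the counts are hypothetical; the second call imagines a model that labels every patient as benign.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correct predictions over all samples."""
    total = tp + tn + fp + fn
    # Returning 0.0 for an empty dataset is an arbitrary convention.
    return (tp + tn) / total if total else 0.0


# Balanced dataset: 50 malignant and 50 benign cases
balanced = accuracy(tp=45, tn=45, fp=5, fn=5)
print(balanced)  # 0.9

# Imbalanced dataset: 5 malignant and 95 benign cases,
# with a model that always predicts "benign"
always_benign = accuracy(tp=0, tn=95, fp=0, fn=5)
print(always_benign)  # 0.95
```

The second model scores 95% while missing every malignant case, which is why the other metrics in this section are needed when the classes are imbalanced.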

Precision

Unlike Accuracy, Precision is an evaluation metric for classification used when the classes are imbalanced.

Precision answers the following question: "What proportion of malignant cancer identifications was actually correct?". It is calculated as the ratio between True Positives and all Positive Predictions (True Positives plus False Positives).

We are interested in Precision if we are worried about False Positives and want to minimize them. It is better to avoid ruining the lives of healthy people with a false diagnosis of malignant cancer.

The lower the number of False Positives, the higher the Precision will be.

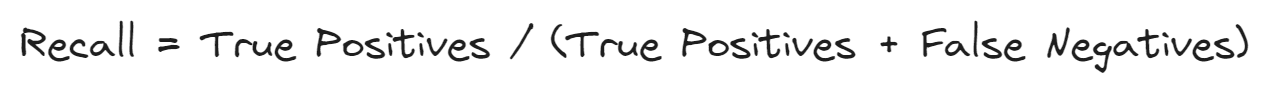
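The ratio described above can be sketched in a few lines. The function name `precision` and the counts are illustrative assumptions; returning 0.0 when the model makes no positive predictions is one common convention, not the only one.

```python
def precision(tp, fp):
    """Share of positive predictions that are actually positive."""
    predicted_positive = tp + fp
    # Convention (assumption): 0.0 when nothing was predicted positive.
    return tp / predicted_positive if predicted_positive else 0.0


print(precision(tp=40, fp=10))  # 0.8
print(precision(tp=40, fp=0))   # 1.0, no healthy patient was alarmed
```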

Recall

Together with Precision, Recall is another metric used when the classes of the output variable have different numbers of observations. Recall answers the following question: "What proportion of patients with malignant cancer was I able to recognize?". It is calculated as the ratio between True Positives and all actual positives (True Positives plus False Negatives).

We care about Recall if our attention is focused on False Negatives. A false negative means that the patient has a malignant cancer, but we weren't able to identify it. Both Recall and Precision should therefore be monitored to obtain good performance on unseen data.
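Recall is the ratio between True Positives and all actual positives, that is, TP / (TP + FN). A minimal sketch, with a hypothetical function name and made-up counts:

```python
def recall(tp, fn):
    """Share of actual positives that the model recognized."""
    actual_positive = tp + fn
    # Convention (assumption): 0.0 when there are no actual positives.
    return tp / actual_positive if actual_positive else 0.0


print(recall(tp=40, fn=10))  # 0.8
print(recall(tp=40, fn=40))  # 0.5, half of the malignant cases were missed
```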

F1-Score

Monitoring both Precision and Recall can be messy, and it is preferable to have a single measure that summarizes both. This is possible with the F1-score, which is defined as the harmonic mean of Precision and Recall.

A high F1-score means that both Precision and Recall have high values. If either Recall or Precision is low, the F1-score is penalized and will be low too.

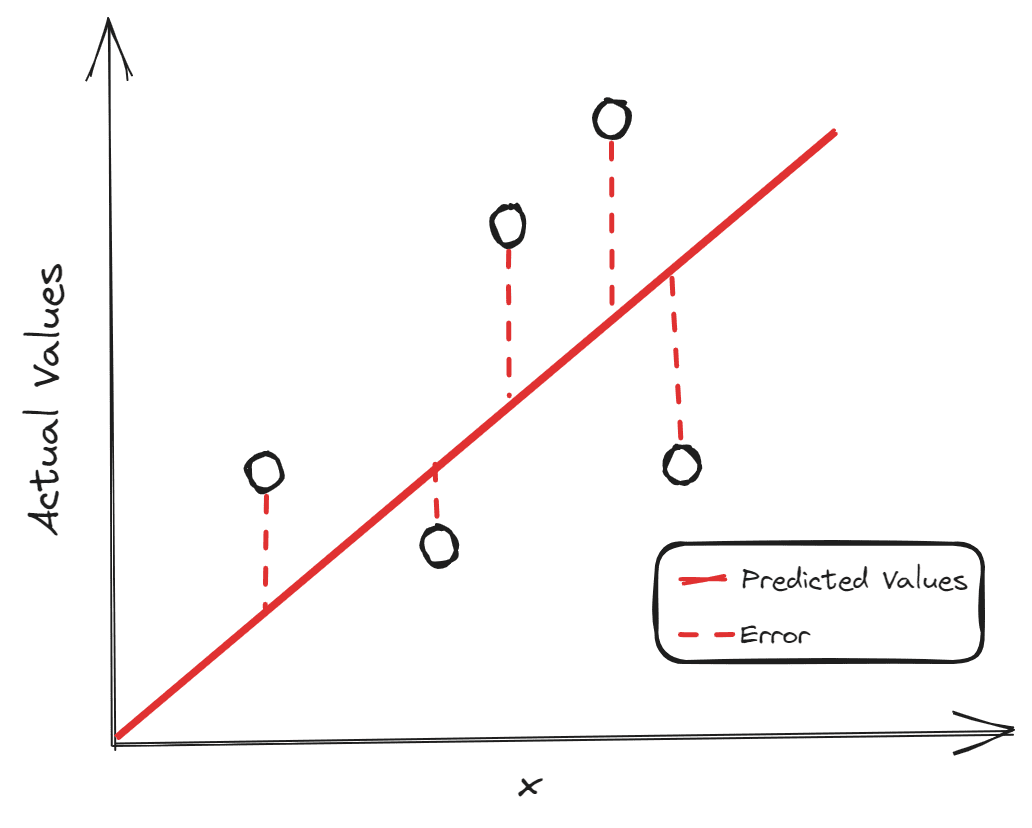
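To see how the harmonic mean penalizes an imbalance between the two metrics, the sketch below compares it with a plain arithmetic mean. The function name `f1_score` and the input values are chosen only for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention (assumption) when both are zero
    return 2 * precision * recall / (precision + recall)


# Both metrics high: the F1-score is high as well
print(round(f1_score(0.9, 0.9), 2))  # 0.9

# One metric low: the F1-score drops sharply,
# while the arithmetic mean would still report 0.5
print(round(f1_score(0.9, 0.1), 2))  # 0.18
print((0.9 + 0.1) / 2)               # 0.5
```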

Regression

Illustration by Author

When the output variable is numerical, we are dealing with a regression problem. As in classification, it is crucial to choose the metric for evaluating the regression model according to the purposes of the analysis.

The most popular example of a regression problem is the prediction of house prices. Are we interested in predicting the house prices accurately? Or do we just care about minimizing the overall error?

In all these metrics, the building block is the residual, which is the difference between the predicted value and the actual value.

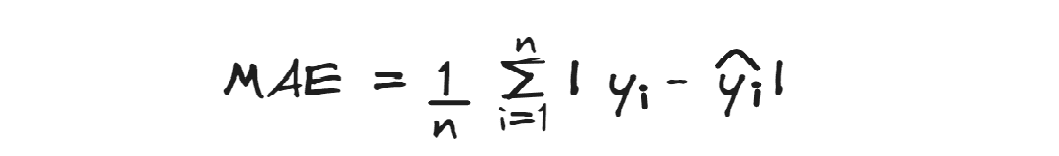
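As a small illustration, residuals can be computed element by element. The house prices below are made up, and the sign convention (predicted minus actual) follows the definition in the text; many references use actual minus predicted instead, which makes no difference to the metrics that follow because they take absolute values or squares.

```python
# Hypothetical house prices
actual = [200_000, 310_000, 150_000]
predicted = [210_000, 300_000, 150_000]

# One residual per observation: predicted value minus actual value
residuals = [p - a for p, a in zip(predicted, actual)]
print(residuals)  # [10000, -10000, 0]
```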

MAE

The Mean Absolute Error calculates the average of the absolute residuals.

It doesn't penalize large errors as much as other evaluation metrics. Every error is weighted in proportion to its size, even the errors of outliers, so this metric is robust to outliers. Moreover, taking the absolute value of the differences ignores the direction of the error.

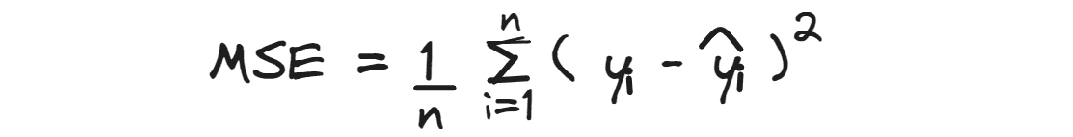
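A minimal sketch of MAE in plain Python follows. The function name and the data are illustrative assumptions; the last prediction is deliberately far off to act as an outlier.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of the absolute residuals."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for a, p in zip(y_true, y_pred)) / len(y_true)


actual = [100, 200, 300, 400]
predicted = [110, 190, 300, 500]  # the last prediction is off by 100

# Absolute errors are 10, 10, 0 and 100
print(mean_absolute_error(actual, predicted))  # 30.0
```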

MSE

The Mean Squared Error calculates the average of the squared residuals.

Since the differences between predicted and actual values are squared, it gives more weight to larger errors, so it is useful when big errors are particularly undesirable, rather than when we only want to minimize the overall error.

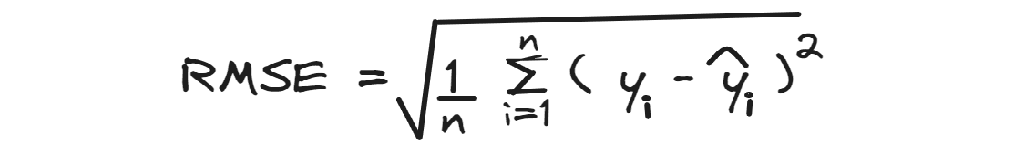
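The effect of squaring can be seen in a short sketch. The function name and the data are hypothetical; three predictions are close to the actual values and one is off by 100.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared residuals."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - a) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true)


actual = [100, 200, 300, 400]
predicted = [110, 190, 300, 500]  # the last prediction is off by 100

# Squared errors are 100, 100, 0 and 10000:
# the single large error accounts for almost the whole total
print(mean_squared_error(actual, predicted))  # 2550.0
```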

RMSE

The Root Mean Squared Error calculates the square root of the average of the squared residuals.

Once you understand MSE, it takes only a second to grasp the Root Mean Squared Error, which is just the square root of MSE.

The advantage of RMSE is that it is easier to interpret, since the metric is on the same scale as the target variable. Apart from the square root, it behaves much like MSE: it still gives more weight to larger differences.

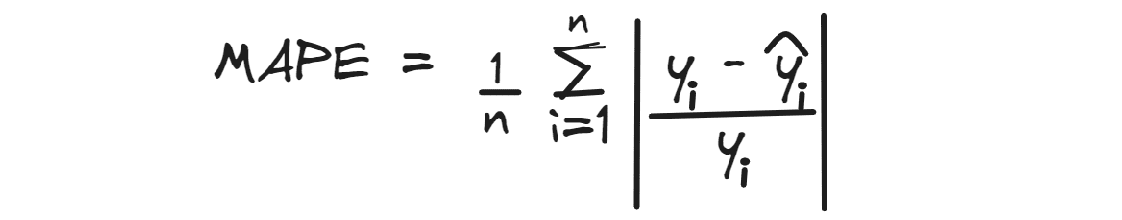
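A minimal sketch of RMSE, using only the standard library. The function name and the data are assumptions made for illustration.

```python
import math


def root_mean_squared_error(y_true, y_pred):
    """Square root of the average of the squared residuals."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - a) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


actual = [100, 200, 300, 400]
predicted = [110, 190, 300, 500]  # the last prediction is off by 100

rmse = root_mean_squared_error(actual, predicted)
print(round(rmse, 2))  # 50.5
```

The result is expressed in the same units as the target, and it is larger than the average absolute error on the same data (30.0) because the one large error weighs more.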

MAPE

The Mean Absolute Percentage Error calculates the average absolute percentage difference between predicted values and actual values.

Like MAE, it disregards the direction of the error, and the best possible value is 0.

For example, if we obtain a MAPE of 0.3 when predicting house prices, it means that, on average, the predictions are off by 30% of the actual price, whether above or below it.
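A sketch of MAPE on made-up house prices, where every prediction is 30% away from the actual price, some above and some below. The function name is an assumption, and raising an error for actual values equal to zero is one possible way to handle the division by zero.

```python
def mean_absolute_percentage_error(y_true, y_pred):
    """Average absolute error, relative to the actual values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in y_true):
        raise ValueError("MAPE is undefined when an actual value is 0")
    return sum(abs((a - p) / a) for a, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical house prices
actual = [100_000, 200_000, 400_000]
predicted = [130_000, 140_000, 520_000]  # +30%, -30%, +30%

mape = mean_absolute_percentage_error(actual, predicted)
print(round(mape, 2))  # 0.3
```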

Final Thoughts

I hope that you have enjoyed this overview of evaluation metrics. I covered only the most important measures for evaluating the performance of classification and regression models. If you have discovered other life-saving metrics that helped you solve a problem but are not mentioned here, drop them in the comments.

Eugenia Anello is currently a research fellow at the Department of Information Engineering of the University of Padova, Italy. Her research project is focused on Continual Learning combined with Anomaly Detection.