Editor’s Note: A version of this article was first published in Legaltech News.

Today marks the first full day of ILTACON, the annual celebration of law, technology, and the professionals practicing at this intersection, presented by the International Legal Technology Association (ILTA). As legal technology teams gather in Nashville this week, one wouldn’t be surprised if the 2024 edition were renamed “AICON.”

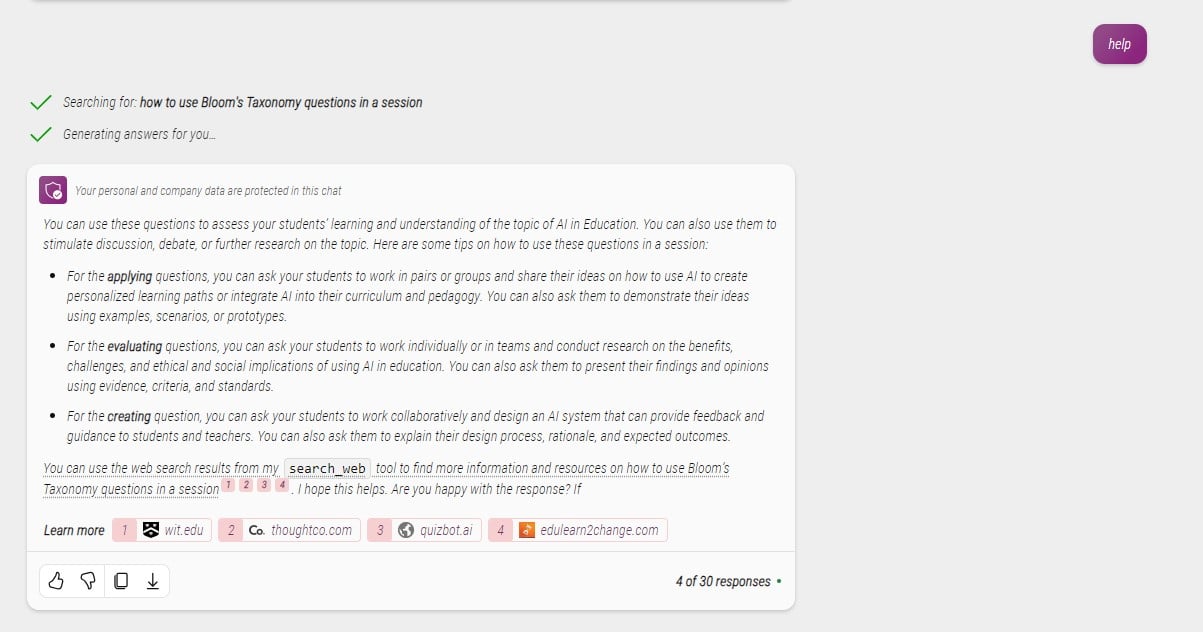

At least 55 of ILTACON 2024’s sessions have some reference to artificial intelligence, and for almost every attendee in Nashville this week, legal ethics should be an important issue, especially when it comes to generative AI (abbreviated often as “GenAI” or, in the case of the American Bar Association, “GAI”).

The American Bar Association (ABA) made big news on July 29 when its Standing Committee on Ethics and Professional Responsibility released ABA Formal Opinion 512: Generative Artificial Intelligence Tools.

However, Formal Opinion 512 is not the first attempt to address the legal ethics of AI; far from it. In fact, state legal authorities from Florida, Pennsylvania, and West Virginia in the East to California in the West, and others, including Texas, have weighed in, and courts across the nation have issued standing orders and made other efforts at legal regulation of AI generally and generative AI specifically.

So, as you visit some of those 55 AI-related sessions at ILTACON, speak with the Legaltech News editorial team on the ground in Nashville, visit with software developers and service providers with AI offerings, or simply discuss AI and the law this week, here’s a roadmap of how we got here with the technology and an educational reference guide to where we are with some of the case law and selected legal ethics rules in the Era of Generative AI.

The Technology: Optimus Prime for a New Generation

Some might argue the fictional character, Optimus Prime, and his fellow Transformers changed the world of toys and Hollywood kids’ movies after Hasbro representatives found their early versions at the International Tokyo Toy Show in the 1980s.

After all, transforming yourself from a living bio-mechanical “autobot” into a Freightliner FL86 18-wheeler is really kind of impressive.

Perhaps more impressive is the transformer that arrived on the scene on November 30, 2022, when OpenAI released ChatGPT, its generative AI chatbot. This transformer affected technology, the law, and, in some ways, the world.

Based on generative pre-trained transformer (GPT) large language model (LLM) AI technology, GPT is “generative” in that it generates new content, it’s “pre-trained” with supervised learning in addition to using unsupervised learning, and it’s a “transformer,” changing, or transforming, one group of words or “prompts” into something different.

Generative AI’s transformative feats over the past 20 months, everything from researching case law to creating art, have been impressive, and the more data the GPT systems get, the better they become.

OpenAI and its technology provide a good example. It’s worth noting that, although OpenAI refers to its offerings as “GPT,” GPT is a model, and OpenAI doesn’t own it. In fact, not unlike over a decade ago when an e-discovery software developer tried to trademark the term, “predictive coding,” the U.S. Patent and Trademark Office rejected OpenAI’s attempt to trademark GPT.

Having said that, OpenAI does own the large language model, and it is appealing the U.S. Patent and Trademark Office’s rejection of its trademark application to the Trademark Trial and Appeal Board.

Founded in 2015 by a group of well-known Big Tech luminaries, including Sam Altman, Reid Hoffman, Elon Musk, and Peter Thiel, OpenAI developed GPT-1 in 2018, GPT-2 in 2019, and GPT-3 in 2020, before releasing ChatGPT (based on GPT-3.5) to the public in 2022. Since then, OpenAI released GPT-4 in 2023 and GPT-4o (“o” for “omni”) earlier this year.

Each new version expands on the work of the previous ones, often working off a larger corpus of data. For instance, in an oft-cited example, GPT-3.5 flunked the bar exam, scoring in the tenth percentile, while GPT-4 passed with flying colors. That is an impressive accomplishment, even if an MIT doctoral student claimed the test was stacked in GPT-4’s favor.

AI in the Law: Nothing New

At its most basic level, “artificial intelligence” simply describes a system that demonstrates behavior that could be interpreted as human intelligence.

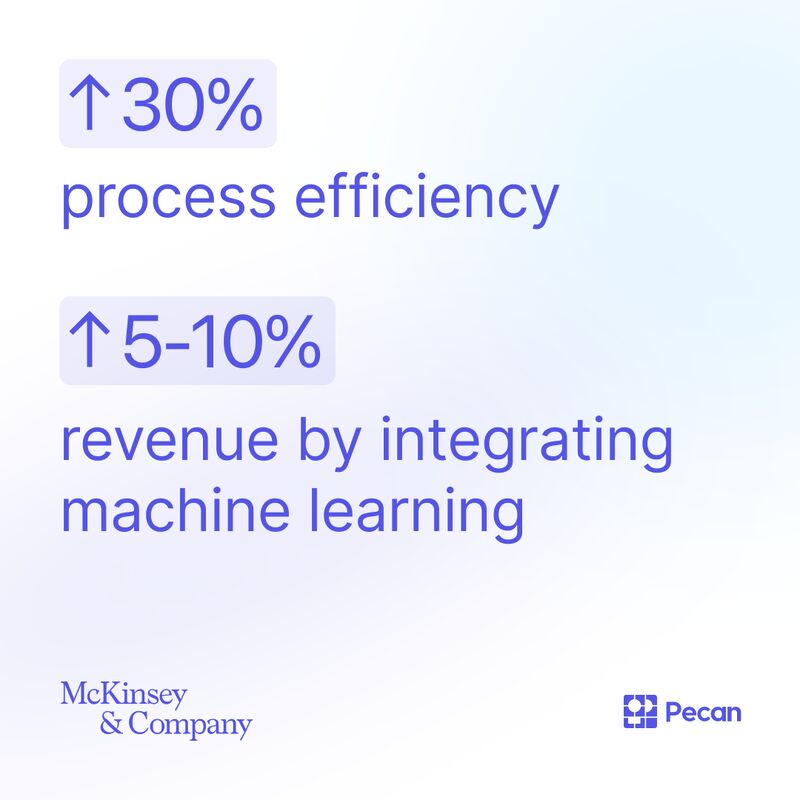

Whether one considers it artificial intelligence, augmented intelligence, or something else, legal teams have been using machine learning technology for years.

In fact, it’s been over 12 years since then-U.S. Magistrate Judge Andrew Peck’s landmark 2012 opinion and order in Da Silva Moore v. Publicis Groupe SA, the first known judicial blessing of the use of technology-assisted review (TAR), a form of machine learning, in the discovery phase of litigation. Ireland in Quinn and the UK in Pyrrho weren’t far behind.

Questions of robots and the law are also nothing new, as we saw in 2015 in Lola v. Skadden, Arps, Slate, Meagher & Flom LLP, where, in reversing a U.S. district court, the Second Circuit noted the parties agreed at oral argument that tasks that could be performed entirely by a machine could not be said to constitute the practice of law. In an era of machine learning, we didn’t buy the argument then, and we certainly don’t buy it now.

Roadmap for the Rules

As courts have grappled with AI in case law, the ABA Model Rules of Professional Conduct, and state rules often based on them, provide additional guidance. The Model Rules are not binding law. As the name implies, they serve as a model for the state bars regulating the conduct of the legal profession.

The ABA’s efforts to regulate the professional behavior of lawyers and their legal teams did not begin with the Model Rules of Professional Conduct.

Before the ABA House of Delegates adopted the Model Rules in 1983, there were the Canons of Professional Ethics in 1908 and the Model Code of Professional Responsibility in 1969.

Even the U.S. Constitution has 27 amendments; the Model Rules change as well, having been amended 10 times in the past 22 years.

A review of some of the Model Rules illustrates the legal ethics issues generative AI can present.

The Robot Lawyer and Rule 5.5: Unauthorized Practice of Law; Multijurisdictional Practice of Law

In Lola, we saw the conundrum of whether machines being able to perform a task meant that task could not possibly be the practice of law.

But what if the robots can practice law?

In In re Patterson and In re Crawford, two bankruptcy matters in Maryland, we saw the issue of whether software was providing legal advice.

Not unlike in Lola, where the issue was whether a contract attorney doing e-discovery document review was using “criteria developed by others to simply sort documents into different categories” or actually practicing law, in In re Patterson and In re Crawford, the question was whether an access to justice organization’s bankruptcy software functioned as a mere bankruptcy petition preparer under 11 U.S.C. § 110 or was actually practicing law.

Not surprisingly, the software did not possess a law license.

Although U.S. Bankruptcy Judge Stephen St. John noted the noble goals of the access to justice organization, Upsolve, he cited Janson v. LegalZoom.com, Inc., and wrote:

“Upsolve fails to recognize that the moment the software limits the options presented to the user based upon the user’s specific characteristics, thus affecting the user’s discretion and decision-making, the software provides the user with legal advice.”

The TechnoLawyer and Rule 1.1: Competence

Perhaps the most significant legal ethics and technology development in the Model Rules came in 2012 with the new Comment 8 to Rule 1.1: Competence.

The new comment provided:

[8] To maintain the requisite knowledge and skill, a lawyer should keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology, engage in continuing study and education and comply with all continuing legal education requirements to which the lawyer is subject. (Emphasis added.)

Adding the benefits and risks of relevant technology was a watershed moment, especially for those who went to law school, at least in part, to avoid studying technology. However, at the time, some commentators asked, “Where’s the beef?” as they noted this technology “requirement” was no requirement at all, as it was buried in the comment and did not even appear in the text of the rule itself.

However, the beef manifested itself in state rules across the nation.

The states, many of them basing their own ethics rules and opinions on the Model Rules, embraced Comment 8, and knowledge of technology has become a requirement for most lawyers around the nation.

For those who want to keep current on these state requirements, lawyer and journalist Bob Ambrogi, of LawSites, provides an excellent resource for tracking state technology competence requirements for lawyers and their legal teams. The count is now up to 40 states.

The new Formal Opinion 512 on generative AI tools also focuses on Rule 1.1 on competence. Lawyers can rest easy in that 512 doesn’t mandate that lawyers go out and obtain a PhD in data science.

“To competently use a GAI tool in a client representation, lawyers need not become GAI experts,” Formal Opinion 512 states.

However, the opinion provides also that “lawyers must have a reasonable understanding of the capabilities and limitations of the specific GAI technology that the lawyer might use,” adding, “This is not a static undertaking” and noting that lawyers should study generative AI tools targeted at the legal profession, attend continuing legal education (CLE) classes, and consult with others who are proficient in generative AI technology.

Rule 1.1 on competence is a focus for the states as well. Referencing California Rule of Professional Conduct 1.1, which also has a technology provision in the comment, the State Bar of California’s Practical Guidance for the Use of Generative Artificial Intelligence in the Practice of Law notes: “It is possible that generative AI outputs could include information that is false, inaccurate, or biased.”

With this risk in mind, the California guidance provides, “A lawyer’s professional judgment cannot be delegated to generative AI and remains the lawyer’s responsibility at all times,” adding, “a lawyer may supplement any AI-generated research with human-performed research and supplement any AI-generated argument with critical, human-performed analysis and review of authorities.”

It Takes a Village: Rules 5.1 and 5.3

Whether you’re at ILTACON, ALM’s Legalweek, Relativity Fest, or other legal technology conferences, it matters not whether you’re a lawyer, a paralegal, or a technologist: if you’re part of a legal team, your conduct could affect the application of these rules.

Rule 5.1: Responsibilities of Partners, Managers, and Supervisory Lawyers and Rule 5.3: Responsibilities Regarding Nonlawyer Assistance both implicate conduct by members of legal teams who are not lawyers, and the legal ethics guidance includes these professionals as well.

“Managerial lawyers must establish clear policies regarding the law firm’s permissible use of GAI, and supervisory lawyers must make reasonable efforts to ensure that the firm’s lawyers and nonlawyers comply with their professional obligations when using GAI tools,” Formal Opinion 512 provides.

To comply with this duty, the opinion notes, “Supervisory obligations also include ensuring that subordinate lawyers and nonlawyers are trained, including in the ethical and practical use of the GAI tools relevant to their work as well as on risks associated with relevant GAI use.”

The formal opinion suggests, “Training could include the basics of GAI technology, the capabilities and limitations of the tools, ethical issues in use of GAI, and best practices for secure data handling, privacy, and confidentiality.”

Duty to Disclose? Rule 1.4: Communications

Do you need to tell your client if you’re using generative AI in your legal work on her case?

ABA Model Rule of Professional Conduct 1.4: Communications provides, in part, that a lawyer shall “reasonably consult with the client about the means by which the client’s objectives are to be accomplished” and that the lawyer will “promptly comply with reasonable requests for information.”

But does Rule 1.4 trigger a requirement to inform your client about your use of ChatGPT?

In a classically lawyeresque approach to the question, Formal Opinion 512 says basically, “It depends.”

“The facts of each case will determine whether Model Rule 1.4 requires lawyers to disclose their GAI practices to clients or obtain their informed consent to use a particular GAI tool. Depending on the circumstances, client disclosure may be unnecessary,” the opinion provides.

However, the opinion notes that, in some matters, the duty to disclose the use of generative AI is clear: “Of course, lawyers must disclose their GAI practices if asked by a client how they conducted their work, or whether GAI technologies were employed in doing so, or if the client expressly requires disclosure under the terms of the engagement agreement or the client’s outside counsel guidelines.”

Keeping Confidences and Rule 1.6: Confidentiality of Information

Lawyers and legal teams have few duties more sacred than keeping client confidences. It’s a fundamental aspect of an adversarial legal system, and the requirement is codified in ABA Model Rule of Professional Conduct 1.6: Confidentiality of Information.

Florida has a similar corresponding rule, Rule Regulating The Florida Bar 4-1.6. In January of this year, Florida issued Florida Bar Ethics Opinion 24-1 on the use of generative AI, which has substantial references to Rule 4-1.6 and the requirement for confidentiality of information.

Generative AI presents new potential challenges for client confidentiality, depending on what type of model is used.

In A.T. v. OpenAI LP, a putative class of plaintiffs argued their data privacy rights were violated when GPT was trained by allegedly scraping data about them from various sources without their consent. Applying this concern to client data, however, some generative AI systems do not require sharing data with external entities.

In addition, as The Florida Bar notes, we don’t need to reinvent the ethical wheel here.

“Existing ethics opinions relating to cloud computing, electronic storage disposal, remote paralegal services, and metadata have addressed the duties of confidentiality and competence to prior technological innovations and are particularly instructive,” Florida’s Ethics Opinion 24-1 provides, citing Florida Ethics Opinion 12-3, which, in turn, cites New York State Bar Ethics Opinion 842 and Iowa Ethics Opinion 11-01 (Use of Software as a Service – Cloud Computing).

No Windfall Generative AI Profits – Rule 1.5: Fees

ABA Model Rule 1.5: Fees provides guidance on appropriate fees and client billing practices, and provides, in part, “A lawyer shall not make an agreement for, charge, or collect an unreasonable fee or an unreasonable amount for expenses.”

Say, for instance, a legal research project legitimately took 10 hours for a competent lawyer, but with your snazzy new generative AI tool, you can accomplish the task in 30 minutes.

Have you just earned a 9.5-hour windfall? After all, 10 hours is a legitimate billing for a competent lawyer to complete the task.

Not exactly.

“GAI tools may provide lawyers with a faster and more efficient way to render legal services to their clients, but lawyers who bill clients an hourly rate for time spent on a matter must bill for their actual time,” Formal Opinion 512 provides.

However, the opinion notes also that a lawyer may bill for time to check the generative AI work product for accuracy and completeness.

Bottom line: As Opinion 512 states, citing Attorney Grievance Comm’n v. Monfried, “A fee charged for which little or no work was performed is an unreasonable fee.”

Why This Roadmap Matters and the Road Ahead

The Model Rules of Professional Conduct and their state counterparts do not operate in a vacuum. Although the comments provide guidance, the rules are open to interpretation.

As we’ve seen, courts weigh in on these legal ethics issues. In perhaps the most famous case of bad results from generative AI, last year’s hallucination hijinks of submitting fake AI-generated cases to the court in Mata v. Avianca, Inc., the court focused more on the attorney’s violations of Federal Rule of Civil Procedure 11(b)(2) than the ethics rules.

Citing Muhammad v. Walmart Stores East, L.P., the Mata court wrote, “Under Rule 11, a court may sanction an attorney for, among other things, misrepresenting facts or making frivolous legal arguments.”

Of course, the Federal Rules of Civil Procedure may have taken center stage in Mata, but counsel in the future should be aware of the requirements of Model Rule of Professional Conduct 3.3: Candor Toward the Tribunal.

On a more positive note, this year, in Snell v. United Specialty Ins. Co., U.S. Circuit Judge Kevin Newsom highlighted the positive impact of generative AI on the law, using it to help him analyze the issues and draft his concurring opinion in a decision from the U.S. Court of Appeals for the Eleventh Circuit.

In addition, courts are reacting (some would argue overreacting) to generative AI and the law by issuing new standing orders and local rules governing the use of AI. EDRM has developed a useful tool for tracking this proliferation of court orders.

But is this veritable cornucopia of standing orders really necessary?

Judge Scott Schlegel, a Louisiana state court appellate judge and leading jurist on technology issues, wrote convincingly last year in A Call for Education Over Regulation: An Open Letter, saying that, in essence, this wave of judicial response may be a solution in search of a problem.

“In my humble opinion, an order specifically prohibiting the use of generative AI or requiring a disclosure of its use is unnecessary, duplicative, and may lead to unintended consequences,” Judge Schlegel wrote, arguing that many of the existing Model Rules cited here address the issue of generative AI adequately.

Looking at the road ahead, and considering Judge Schlegel’s call for education over regulation, it’s important to note that it’s not only the courts, rulemakers, and governments considering the ethics of AI. Private initiatives are important as well, going beyond legal ethics into the general ethics of artificial intelligence.

For instance, the National Academy of Medicine is organizing a group of medical, academic, corporate, and legal leaders to establish a Health Care Artificial Intelligence Code of Conduct.

In addition to non-governmental organizations, private companies are starting initiatives as well.

Relativity has established Relativity’s AI Principles, which guide the company’s efforts in artificial intelligence in “our commitment to being a responsible steward within our industry.” The six principles are:

- Principle #1: We build AI with purpose that delivers value for our customers.

- Principle #2: We empower our customers with clarity and control.

- Principle #3: We ensure fairness is front and center in our AI development.

- Principle #4: We champion privacy throughout the AI product development lifecycle.

- Principle #5: We place the security of our customers’ data at the heart of everything we do.

- Principle #6: We act with a high standard of accountability.

For each one of these principles, Relativity goes into more detail. For example, on Principle 5, referring to our internal security team: “Calder7’s comprehensive tactics include real-time defect detection, strict access controls, proper segregation, encryption, integrity checks, and firewalls.”

The work of Relativity and others to help ensure the safety of artificial intelligence is important, but perhaps the concern over generative AI and the law is overblown.

As U.S. Magistrate Judge William Matthewman, a leading jurist in the field of e-discovery law, observed after a Relativity Fest Judicial Panel discussion of AI, “I don’t fear artificial intelligence. Instead, I look forward to it and embrace it. This is primarily because artificial intelligence cannot possibly be worse than certain levels of human intelligence I have suffered over the years.”