The release of ChatGPT coincided with an environment of rising anxiety around academic integrity brought about by the move to online assessment post COVID. This, at least, was the experience of my colleagues and me in the Digital Assessment Advisory at UCL, and it appeared to mirror concerns elsewhere.

The consequence was, and remains, a demand for increased assessment security measures such as in-person invigilated exams. This response can be understood not so much as resistance to change as a recognition that the rapid pace of AI developments requires an agile and flexible response, something that HE regulatory systems often cannot accommodate. Add to that the workload involved in changing assessment and the negotiations required both internally and, in many cases, externally with Professional, Statutory and Regulatory Bodies, and it’s not surprising that familiarity wins out.

Of course, there is a lot at stake; we have a responsibility to protect the integrity of our degrees and ensure that students receive awards fairly and on their own merit. The question is whether the fall-back position of traditional security-driven assessment can be any more than a stopgap. As Rose Luckin, an expert in both AI and education, proposes, GenAI is a game-changing technology for assessment and education generally.

“[GenAI is] much more than a tool for cheating, and retrograde steps (like going back to stressful in-person exams assessing things that AI can often do for us) not only fail to prepare students to engage with the technology, they miss opportunities to focus on what they really need.”

So, what do students really need from assessment in an AI-enabled world? In the view of Luckin (and others), AI will change how and what we teach and learn. The kinds of capabilities students will need to develop and be able to demonstrate include critical thinking, contextual understanding, emotional intelligence and metacognition.

Acknowledging that we can’t ignore GenAI, ban it or outrun it with detection tools (ethically at least), UCL from the outset adopted the only viable option, which was to integrate and adapt to AI in an informed and responsible way. However, taking this position meant supporting staff to navigate this ever-changing terrain.

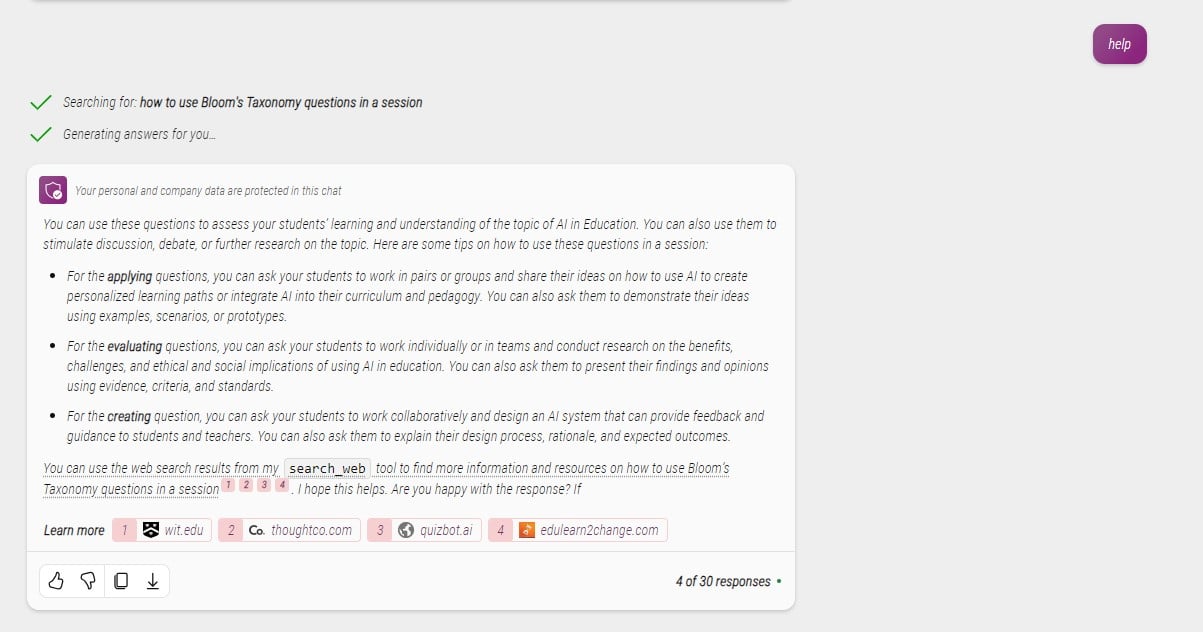

An AI and Education experts’ group was set up in January, focusing on four strands: Policy and Ethics, Academic Skills, Assessment Design and Opportunities. One output of the group is a newly launched Generative AI hub for UCL staff, bringing together all the latest information, resources and guidance on using Artificial Intelligence in education, including a three-tiered categorisation of AI use in an assessment that staff can use to guide students on expectations.

As part of the assessment design strand of this group, my contribution was a set of resources called ‘Designing assessment in an AI enabled world’, divided into two parts:

- Small-scale adaptations/enhancements to current assessment practice that are viable within the current regulatory framework.

- Planning for larger changes, with an assessment menu of suggestions either for integrating AI or for tasks to which AI would find it difficult to generate a response.

The inspiration for the assessment menu was Lydia Arnold’s ‘Top Trumps’, a wonderful resource consisting of fifty suggestions for diversifying assessment, using a star rating system based on Ashford-Rowe, Herrington and Brown’s characteristics of authentic assessment. Lydia generously made this free to edit and adapt for non-commercial purposes.

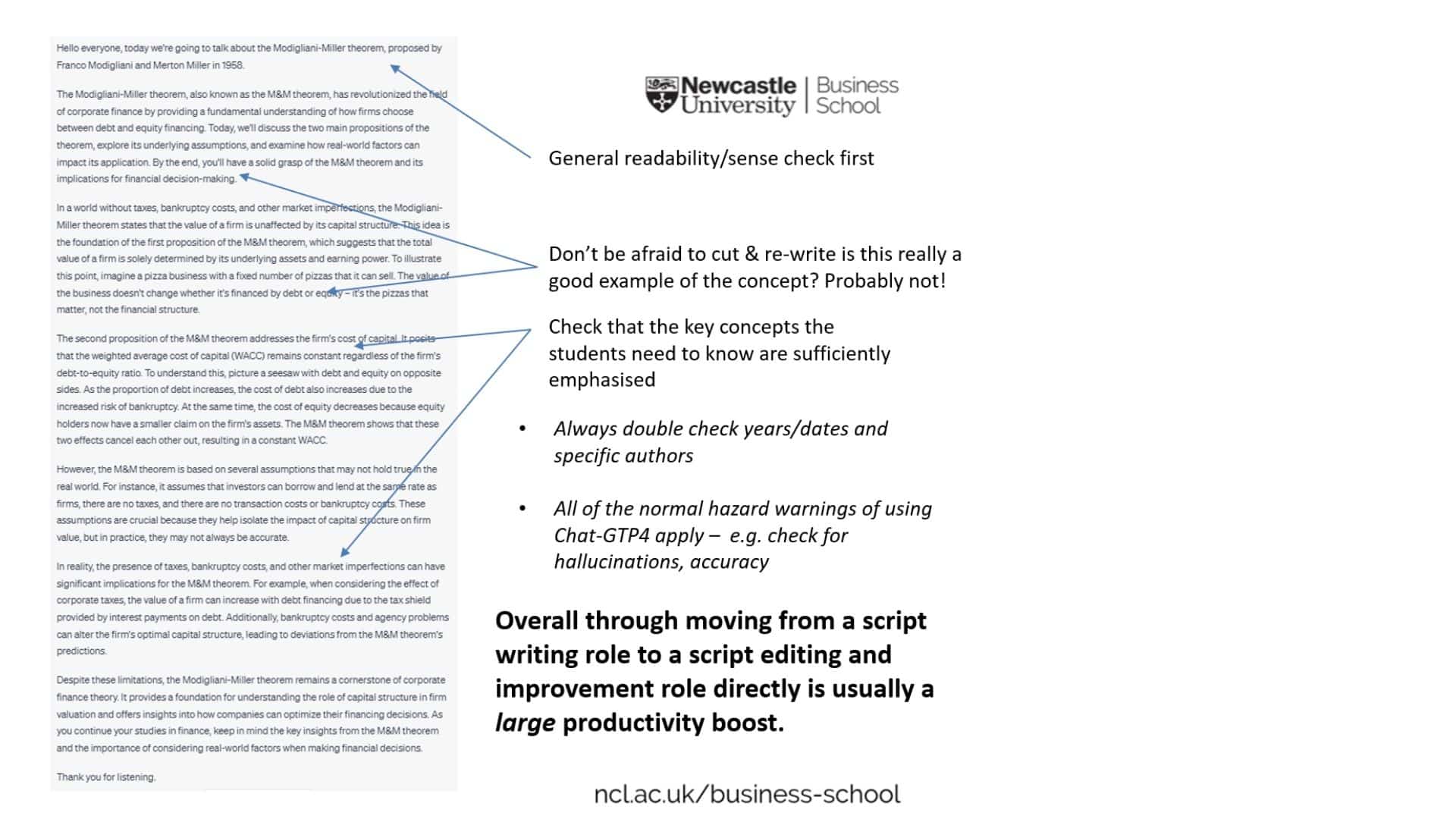

Although there was a lot of guidance around using AI in education, it was surprisingly hard to find concrete examples of assessment design for an AI-enabled world. I thought Top Trumps would be an ideal starting point and set about trying to reconfigure them either to integrate AI or to make it more difficult to generate a response using AI. I also drew on material such as the excellent crowdsourced ‘Creative ideas to use AI in education’ resource, Ryan Watkins’ ‘Update your Course Syllabus’ post and Lydia Arnold’s recent post on integrating AI into assessment practices, and added a few of my own. A list of contributors can be found here: Assessment Menu Sources. Examples were tweaked accordingly, framed as student activities and broken down into steps.

I wanted to include meaningful indices for UCL staff to help them identify relevant approaches:

- A revised version of the star rating system used in Top Trumps.

- Types of assessment, corresponding to those in UCL’s Assessment Framework for taught programmes.

- Types of learning that the activity assesses and develops, referencing Bloom’s taxonomy but also the kinds of capabilities experts envisage as being increasingly important.

- Suitable formats.

- Where appropriate, whether the activity is suitable for specific disciplinary areas.

My colleague Lene-Marie Kjems helped with visual design and accessibility. We were about to publish our first version when my manager, Marieke Guy, mentioned that a collaborative assessment group at Jisc were also planning an AI version of Lydia Arnold’s Top Trumps. There is no such thing as a new idea, as Mark Twain famously said!

The Jisc group – Sue Attewell (Jisc), Pam Birtill (University of Leeds), Eddie Cowling (University of York), Cathy Minett-Smith (UWE), Stephen Webb (University of Portsmouth), Michael Webb (Jisc) and Marieke (UCL) – invited me to work together on producing an interactive, searchable version of the assessment menu for their website. Having forty options to choose from could be overwhelming, so being able to filter according to requirements would make it much more usable. We also invited Lydia Arnold (Harper Adams University) to be a consultant, and together we worked to review and refine the cards.

Deciding on the search categories was central to the usefulness of the resource. After some discussion we opted to use the categories of summative assessment included in UCL’s Assessment Framework as the entry point, as these are common in UK Higher Education:

- Controlled condition exams.

- Take home papers/open book exams.

- Quizzes and In-class tests.

- Practical Exams.

- Dissertation.

- Coursework and other Written Assessments.

Margaret Bearman and others from Deakin, in their highly informative CRADLE webinar series on GenAI, suggest we have roughly a year to turn things around in education. In this light, it may seem strange to include Controlled condition exams but, however much we want (or need) radical transformation, we felt it would be more helpful to meet people where they currently are, which, as mentioned above, often includes the familiar territory of exam-based assessment.

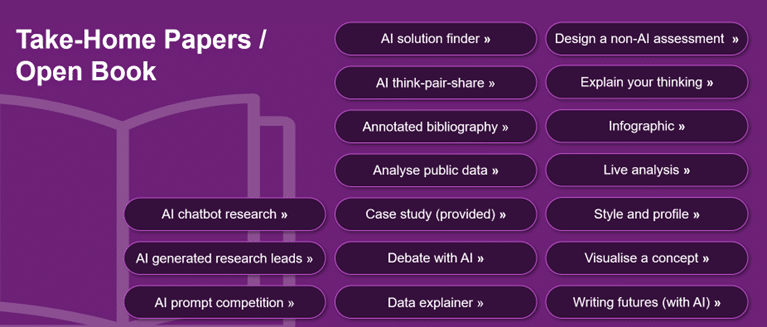

The Jisc design team did a great job of turning the original PPT version into the interactive resource you can now find in the Uses in Learning and Teaching section (scroll down to locate it). Using clickable icons representing each of the above categories (on slide 7), users are presented with options to consider.

Image: example search results for Take-Home Papers/Open Book

Like any AI-related guidance we produce right now, this will need to be updated as the technology and assessment practice evolve together.

The most frequent questions I’m currently asked are ‘How can I make my assessments AI proof?’ and ‘How can I stop students using AI when they’re not supposed to?’ This resource doesn’t promise to answer either of these questions, but the hope is that this genuinely collaborative menu of assessment ideas, drawing on expertise from the UK and beyond, will provide inspiration for educators and support them to work alongside students to engage productively and thoughtfully with AI in their assessments.

Find out more by visiting our Artificial Intelligence page to view publications and resources, join us for events and discover what AI has to offer through our range of interactive online demos.

For regular updates from the team, sign up to our mailing list.
