Picture by Creator

Okay-Means clustering is likely one of the mostly used unsupervised studying algorithms in knowledge science. It’s used to mechanically section datasets into clusters or teams based mostly on similarities between knowledge factors.

On this brief tutorial, we are going to find out how the Okay-Means clustering algorithm works and apply it to actual knowledge utilizing scikit-learn. Moreover, we are going to visualize the outcomes to know the information distribution.

What’s Okay-Means Clustering?

Okay-Means clustering is an unsupervised machine studying algorithm that’s used to resolve clustering issues. The purpose of this algorithm is to seek out teams or clusters within the knowledge, with the variety of clusters represented by the variable Okay.

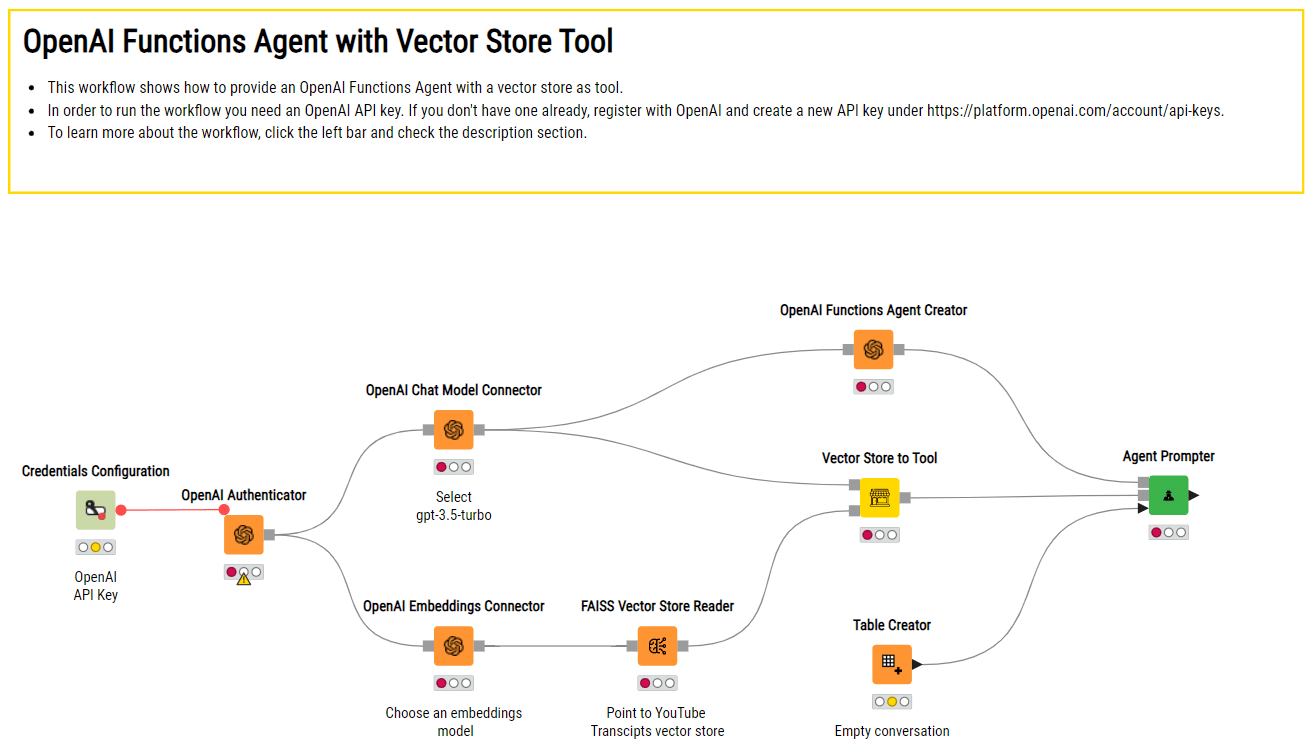

The Okay-Means algorithm works as follows:

- Specify the variety of clusters Okay that you really want the information to be grouped into.

- Randomly initialize Okay cluster facilities or centroids. This may be finished by randomly choosing Okay knowledge factors to be the preliminary centroids.

- Assign every knowledge level to the closest cluster centroid based mostly on Euclidean distance. The information factors closest to a given centroid are thought of a part of that cluster.

- Recompute the cluster centroids by taking the imply of all knowledge factors assigned to that cluster.

- Repeat steps 3 and 4 till the centroids cease transferring or the iterations attain a specified restrict. That is finished when the algorithm has converged.

Gif by Alan Jeffares

The target of Okay-Means is to attenuate the sum of squared distances between knowledge factors and their assigned cluster centroid. That is achieved by iteratively reassigning knowledge factors to the closest centroid and transferring the centroids to the middle of their assigned factors, leading to extra compact and separated clusters.

Okay-Means Clustering Actual-World Instance

In these examples, we are going to use Mall Buyer Segmentation knowledge from Kaggle and apply the Okay-Means algorithm. We may also discover the optimum variety of Okay (clusters) utilizing the Elbow methodology and visualize the clusters.

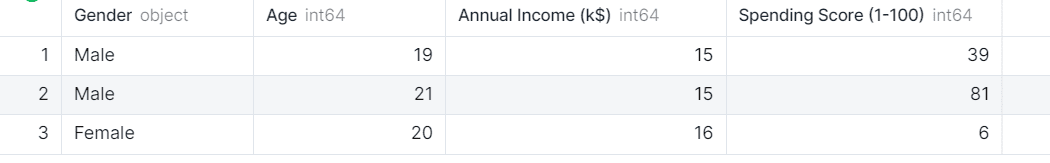

Knowledge Loading

We’ll load a CSV file utilizing pandas and make “CustomerID” as an index.

import pandas as pd

df_mall = pd.read_csv("Mall_Customers.csv",index_col="CustomerID")

df_mall.head(3)

The information set has 4 columns and we’re thinking about solely three: Age, Annual Revenue, and Spending Rating of the shoppers.

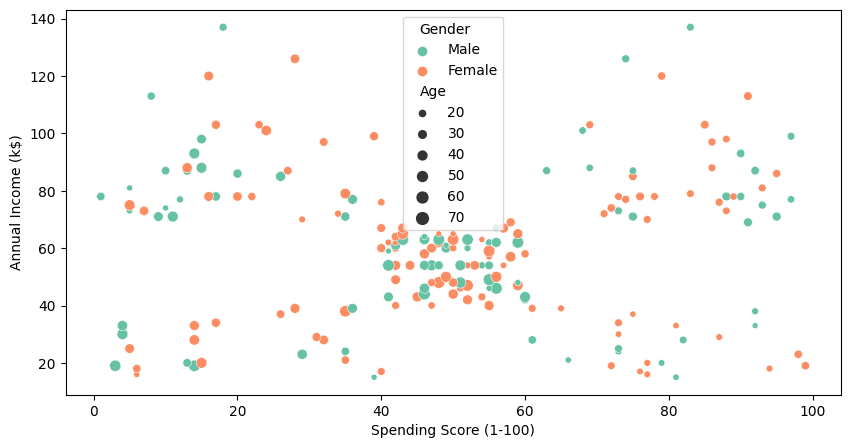

Visualization

To visualise all 4 columns, we are going to use seaborn’s `scatterplot` .

import matplotlib.pyplot as plt

import seaborn as sns

plt.determine(1 , figsize = (10 , 5) )

sns.scatterplot(

knowledge=df_mall,

x="Spending Rating (1-100)",

y="Annual Revenue (okay$)",

hue="Gender",

measurement="Age",

palette="Set2"

);

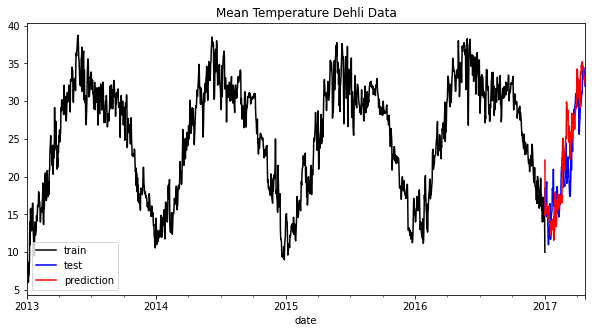

Even with out Okay-Means clustering, we are able to clearly see the cluster in between 40-60 spending rating and 40k to 70k annual revenue. To search out extra clusters, we are going to use the clustering algorithm within the subsequent half.

Normalizing

Earlier than making use of a clustering algorithm, it is essential to normalize the information to eradicate any outliers or anomalies. We’re dropping the “Gender” and “Age” columns and shall be utilizing the remainder of them to seek out the clusters.

from sklearn import preprocessing

X = df_mall.drop(["Gender","Age"],axis=1)

X_norm = preprocessing.normalize(X)

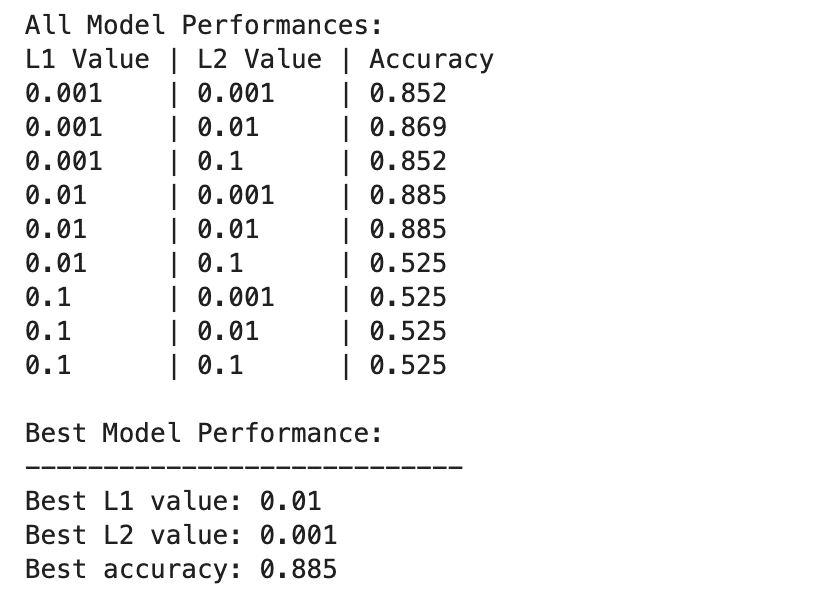

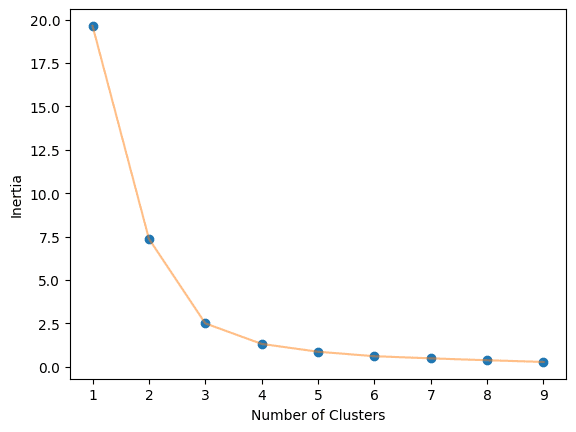

Elbow Technique

The optimum worth of Okay within the Okay-Means algorithm will be discovered utilizing the Elbow methodology. This includes discovering the inertia worth of each Okay variety of clusters from 1-10 and visualizing it.

import numpy as np

from sklearn.cluster import KMeans

def elbow_plot(knowledge,clusters):

inertia = []

for n in vary(1, clusters):

algorithm = KMeans(

n_clusters=n,

init="k-means++",

random_state=125,

)

algorithm.match(knowledge)

inertia.append(algorithm.inertia_)

# Plot

plt.plot(np.arange(1 , clusters) , inertia , 'o')

plt.plot(np.arange(1 , clusters) , inertia , '-' , alpha = 0.5)

plt.xlabel('Variety of Clusters') , plt.ylabel('Inertia')

plt.present();

elbow_plot(X_norm,10)

We obtained an optimum worth of three.

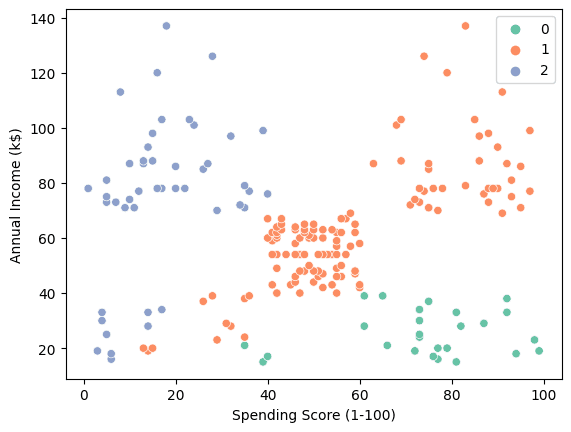

KMeans Clustering

We’ll now use KMeans algorithm from scikit-learn and supply it the Okay worth. After that we are going to match it on our coaching dataset and get cluster labels.

algorithm = KMeans(n_clusters=3, init="k-means++", random_state=125)

algorithm.match(X_norm)

labels = algorithm.labels_

We are able to use scatterplot to visualise the three clusters.

sns.scatterplot(knowledge = X, x = 'Spending Rating (1-100)', y = 'Annual Revenue (okay$)', hue = labels, palette="Set2");

- “0”: From excessive spender with low annual revenue.

- “1”: Common to excessive spender with medium to excessive annual revenue.

- “2”: From Low spender with Excessive annual revenue.

This perception can be utilized to create customized advertisements, rising buyer loyalty and boosting income.

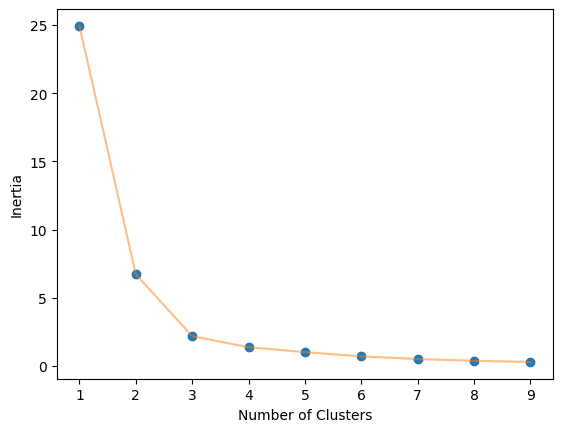

Utilizing completely different options

Now, we are going to use Age and Spending Rating because the function for the clustering algorithm. It is going to give us an entire image of buyer distribution. We’ll repeat the method of normalizing the information.

X = df_mall.drop(["Gender","Annual Income (k$)"],axis=1)

X_norm = preprocessing.normalize(X)

Calculate the optimum variety of clusters.

elbow_plot(X_norm,10)

Prepare the Okay-Means algorithm on Okay=3 clusters.

algorithm = KMeans(n_clusters=3, init="k-means++", random_state=125)

algorithm.match(X_norm)

labels = algorithm.labels_

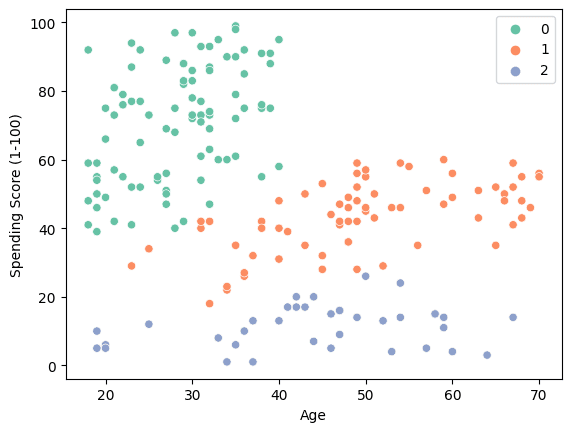

Use a scatter plot to visualise the three clusters.

sns.scatterplot(knowledge = X, x = 'Age', y = 'Spending Rating (1-100)', hue = labels, palette="Set2");

- “0”: Younger Excessive spender.

- “1”: Medium spender from center age to previous ages.

- “2”: Low spenders.

The consequence means that corporations can enhance income by focusing on people aged 20-40 with disposable revenue.

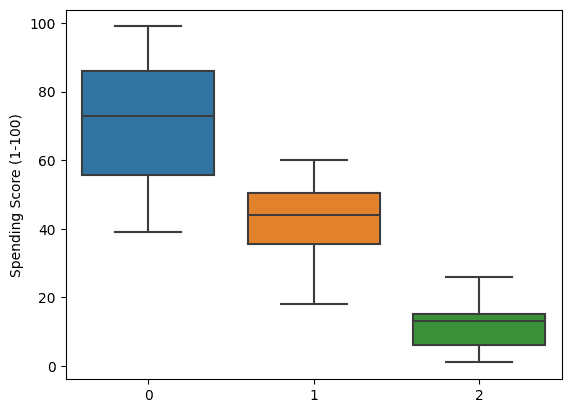

We are able to even go deep by visualizing the boxplot of spending scores. It clearly reveals that the clusters are shaped based mostly on spending habits.

sns.boxplot(x = labels, y = X['Spending Score (1-100)']);

Conclusion

On this Okay-Means clustering tutorial, we explored how the Okay-Means algorithm will be utilized for buyer segmentation to allow focused promoting. Although Okay-Means shouldn’t be an ideal, catch-all clustering algorithm, it supplies a easy and efficient strategy for a lot of real-world use circumstances.

By strolling by means of the Okay-Means workflow and implementing it in Python, we gained perception into how the algorithm capabilities to partition knowledge into distinct clusters. We discovered strategies like discovering the optimum variety of clusters with the elbow methodology and visualizing the clustered knowledge.

Whereas scikit-learn supplies many different clustering algorithms, Okay-Means stands out for its pace, scalability, and ease of interpretation.

Abid Ali Awan (@1abidaliawan) is an authorized knowledge scientist skilled who loves constructing machine studying fashions. At present, he’s specializing in content material creation and writing technical blogs on machine studying and knowledge science applied sciences. Abid holds a Grasp’s diploma in Know-how Administration and a bachelor’s diploma in Telecommunication Engineering. His imaginative and prescient is to construct an AI product utilizing a graph neural community for college students battling psychological sickness.