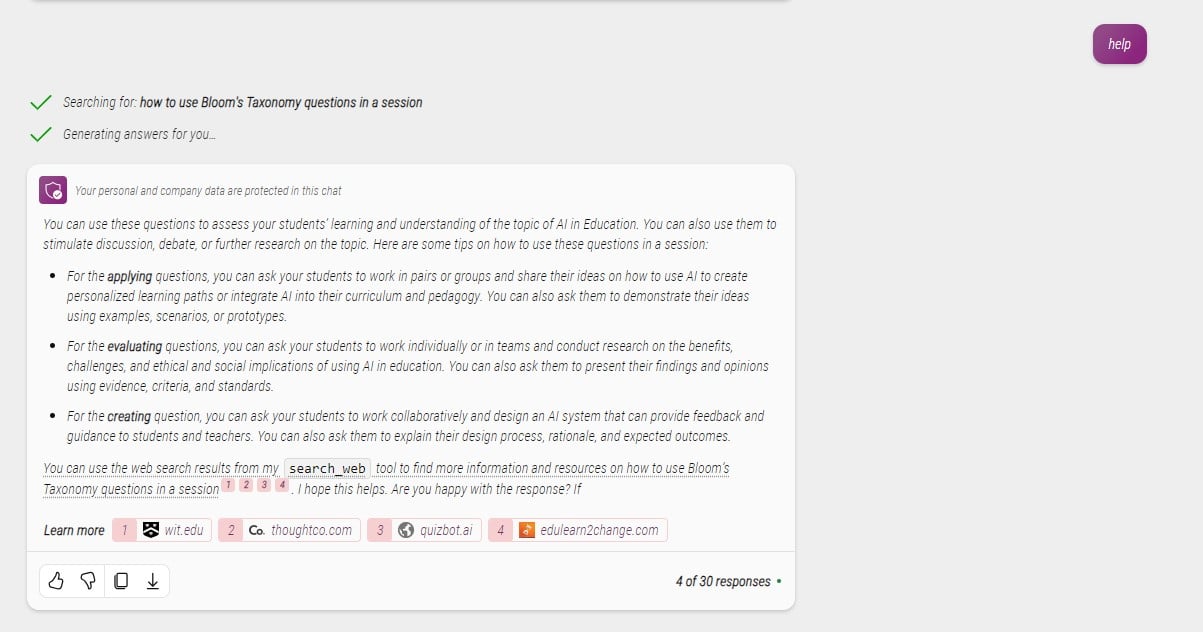

Google’s generative AI work has perhaps tended to slip a little under the radar, at least in the discussions I’ve been involved in, which have tended to focus on ChatGPT and Copilot. They’ve just put out a lot of new information though, including, for me, probably the most interesting paper on LLMs in education for quite a while: Towards Responsible Development of Generative AI for Education: An Evaluation-Driven Approach

The paper is quite a long read, so I thought it would be helpful to summarise some of the main points and why I found them interesting. Before that though, I just want to take a quick detour into one of their other announcements: Illuminate.

Illuminate

This is only a demo, but it turns research papers into discussions, like mini podcasts. The first demo is for ‘Attention Is All You Need’, the seminal paper introducing transformers, which are one of the key foundations of the generative AI we see today. I found this a great way of getting a refresher on the paper. I’d love to see how well it works outside of this demo. At the moment there’s a waiting list to get in. I can see why you might say ‘Just read the paper’, but personally I can see this as a great way of making research more accessible.

LearnLM-Tutor

The main thing I want to look at though is LearnLM-Tutor, a large language model fine-tuned for education. We’d heard similar things around OpenAI’s work with Khan Academy, but there we had no information on how they were actually doing it. Google lifts the lid a little, and in their paper describes both how they tune their model and how they evaluate it. It’s well worth understanding this: it’s not too techie and, I think, quite interesting. It points to some things we as a tertiary education sector should be engaging in.

Let’s start with what we might be trying to achieve here. Google seems to be looking at two use cases: marking and feedback, and as a dialogue tutor.

Learning Example:

Learner: I want to know more about money supply. I already know the basics. I want to learn what’s beyond AP economics

LearnLM-Tutor: Sure, I can help with that. Let’s test your understanding of the equation of exchange. Can you tell me the formula for the equation of exchange?

So LearnLM engages by starting a dialogue with the user, in this case to see where they are. That’s quite different to a standard LLM. In their paper Google show the Gemini response, but I’m going to compare to ChatGPT with no additional prompting:

ChatGPT: “Understanding money supply beyond the basics of AP Economics involves delving into more advanced concepts, theories, and the implications of different types of money supply measures. Here’s a deeper look into the topic:…” (goes on to tell me loads about it).

So it’s just moved straight to ‘information giving’ mode, which I’d expect, but not from something acting as a tutor.

Here’s an example on feedback:

Student’s Answer: “Dissolving salt in water gives us a negatively charged sodium ion and a positively charged chlorine ion”

LearnLM-Tutor Feedback: Actually, sodium loses its one valence electron to chlorine, giving sodium a positive charge and chlorine a negative charge. What else do you know about this process?

So LearnLM starts to engage with students to help them work through to the solution. Again, if we compare to ChatGPT with no additional prompting:

ChatGPT: “It seems there’s a small mix-up in the description of the ions formed when salt (sodium chloride, NaCl) dissolves in water.” (goes on to describe the correct answer in more detail).

How are they doing this?

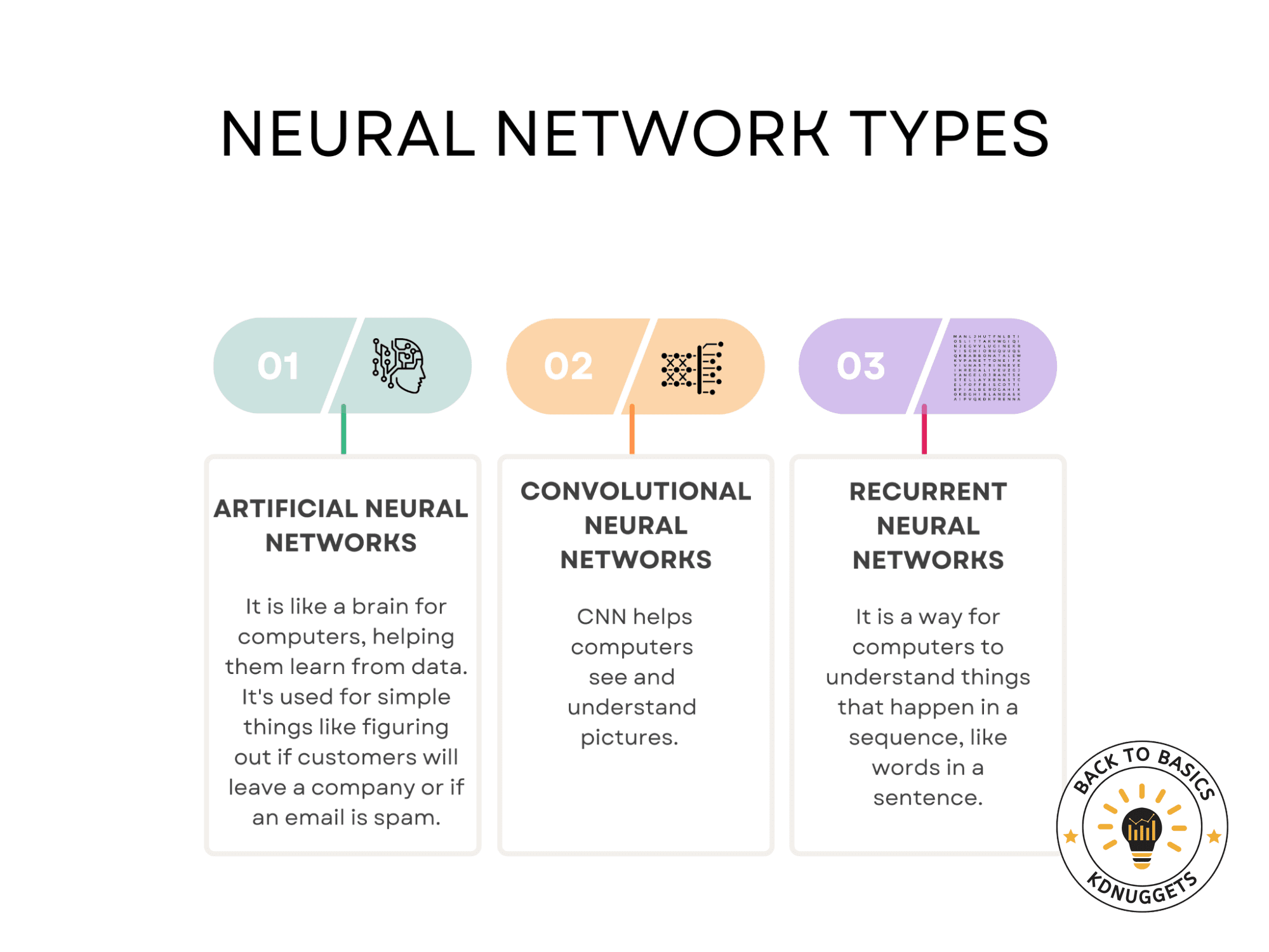

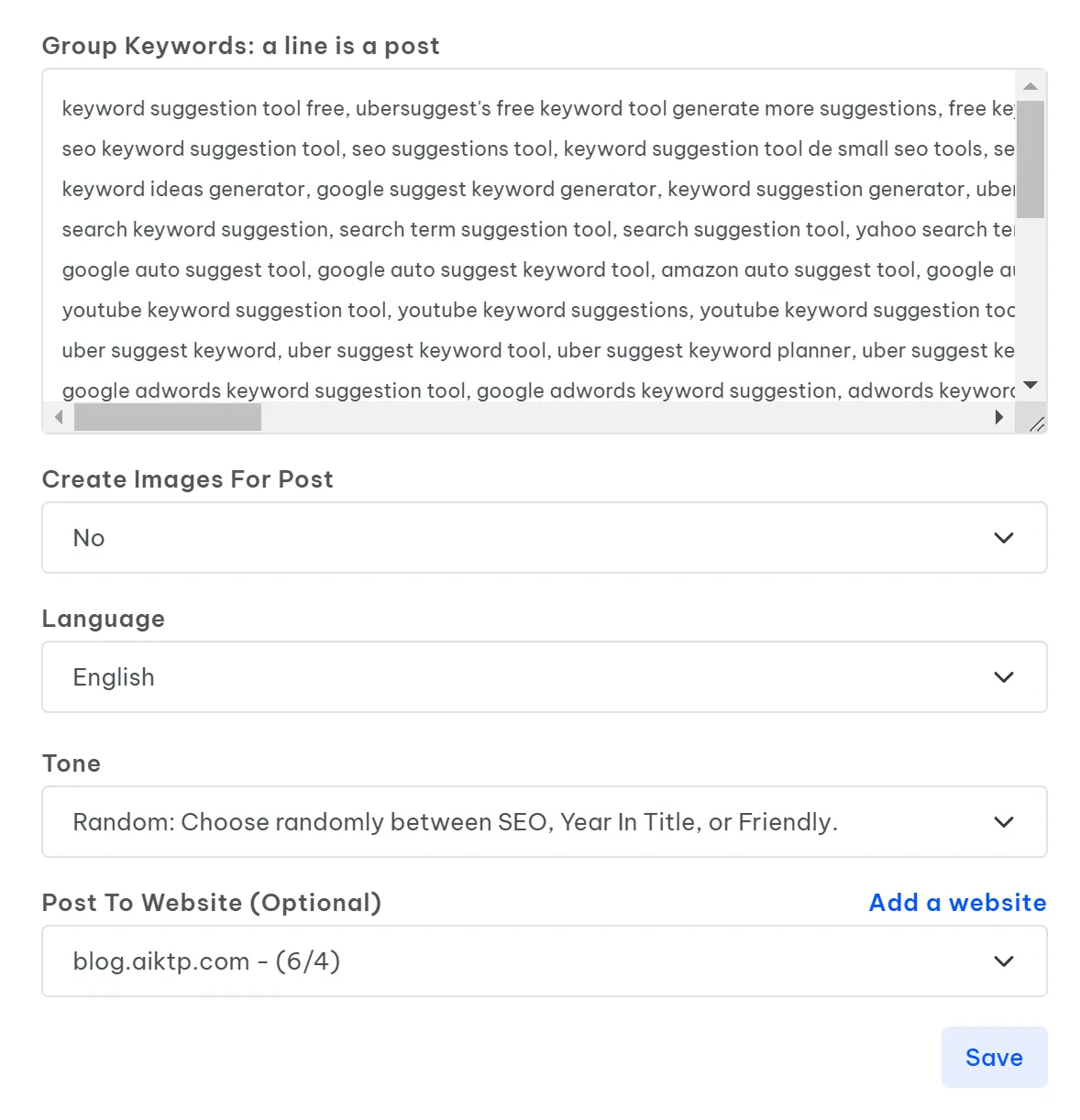

So some basics first. In the education space, we’ve broadly seen two approaches to creating educational AI chatbots, usually combined:

- Prompting

- Retrieval Augmented Generation

With prompting we describe the behaviour of the AI chatbot. We’re used to seeing basic examples along the lines of:

“You are an expert in computer coding, please act as a helpful tutor for students new to Python”

We’ve also seen many examples of prompting that get ChatGPT to, for example, act as a Socratic opponent.

Developers will often include much more complex prompts in the systems they build. Prompts can run to many pages describing the behaviour in detail. The problem with creating a general-purpose AI tutor via prompting is that it’s really, really hard to put into words the exact behaviour of a good tutor, and there are no well-defined, universally agreed pedagogical best practices.
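To make the prompting approach concrete, here is a minimal sketch of how a behaviour-describing prompt is typically packaged alongside a learner’s question. The role/content message layout is a common chat convention, and `build_tutor_messages` is a hypothetical helper name used only for illustration; none of this comes from the paper or from any specific product.

```python
# Hypothetical helper: pairs a behaviour-describing system prompt
# with the learner's message in a chat-style message list.
TUTOR_PROMPT = (
    "You are an expert in computer coding, please act as a helpful tutor "
    "for students new to Python"
)


def build_tutor_messages(learner_message, system_prompt=TUTOR_PROMPT):
    """Return a message list: the behaviour description first, then the learner's turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": learner_message},
    ]


if __name__ == "__main__":
    for message in build_tutor_messages("What is a for loop?"):
        print(f"{message['role']}: {message['content']}")
```

Everything the tutor is supposed to do has to fit into that one `system_prompt` string, which is exactly why long, multi-page prompts appear in real systems.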

Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is used by Gen AI chatbots to provide responses based on a particular set of information. I’ve talked about it more in another blog post. It doesn’t change the behaviour of the chatbot though, just the sources of information it uses for responses, so it will be combined with prompting if we want it to behave in a particular, tutor-like way. I mention this, as it’s often part of the mix people discuss when creating AI tutors.
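As a rough illustration of how RAG and prompting fit together, the sketch below picks the most relevant note from a tiny set and places it in the prompt next to the tutor instructions. It is a simplification under stated assumptions: real systems usually rank with embeddings rather than the plain word overlap used here, and the documents and function names are invented for the example.

```python
import re

# Toy knowledge base: illustrative notes, not real course material.
DOCUMENTS = [
    "A for loop repeats a block of code once for each item in a sequence.",
    "A function is a named, reusable block of code defined with def.",
    "A list is an ordered, changeable collection written with square brackets.",
]


def tokenize(text):
    """Lowercase the text and return its words as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many distinct words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(question, documents, system_prompt):
    """Combine tutor-style instructions, retrieved context and the question."""
    context = "\n".join(retrieve(question, documents))
    return f"{system_prompt}\n\nContext:\n{context}\n\nQuestion: {question}"
```

Note that the retrieved context only changes what the model knows; the tutor-like behaviour still has to come from `system_prompt`.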

LearnLM and fine-tuning

Google takes a different approach with LearnLM, which is to fine-tune the model. This means taking an existing model (in this case their Gemini model) and providing it with additional training data to change the actual model. Part of the reason we don’t see much of this is that it’s expensive and complex to do. Not as complex as training a model from scratch, but still not cheap. The other challenge is that you need a data set to use to fine-tune the model, and as Google notes, there are no existing data sets that define ‘a good tutor’. They end up defining their own, which looks like this:

- Human tutors: They collected conversations between human learners and tutors, carried out through a text interface. The participants were paid for this.

- Gen AI role-play: A synthetic set generated by Gen AI playing both the tutor and learner. They tried to make this useful by involving humans in the prompting, and by manually filtering and editing the data set. This might feel a bit strange as an idea, but synthetic sets like this are increasingly common.

- GSM8K (Grade School Maths) based: There’s an existing data set based around word problems for grade school maths in the US, which they turned into learner/tutor conversations.

- Golden conversations: They worked with teachers to create a small number of high-quality example conversations for pedagogical behaviours they wanted the model to learn, which they note were very labour intensive to create, but strike me as something we as a sector could contribute to.

- Safety: Conversations that guide safe responses. These were handwritten by the team, or based on failures seen by the test team, and covered harmful conversations. The paper doesn’t (I think) describe this in more detail.

Google ended up with a dataset of model conversations, which they could then use to fine-tune their model, to get it to behave like a tutor.
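To give a feel for what ‘a dataset of model conversations’ might look like in practice, here is a minimal sketch that turns one learner/tutor exchange into training records and serialises them as JSON Lines. The record layout (`source`, `context`, `target`) and the function names are assumptions made for illustration; the paper’s actual data format is not described in this post.

```python
import json

# Illustrative conversation, based on the feedback example earlier in this post.
conversation = [
    {
        "role": "learner",
        "text": "Dissolving salt in water gives us a negatively charged "
                "sodium ion and a positively charged chlorine ion",
    },
    {
        "role": "tutor",
        "text": "Actually, sodium loses its one valence electron to chlorine, "
                "giving sodium a positive charge and chlorine a negative charge. "
                "What else do you know about this process?",
    },
]


def to_training_examples(conversation, source):
    """Create one record per tutor turn: the turns so far as context, the tutor reply as target."""
    examples = []
    for index, turn in enumerate(conversation):
        if turn["role"] != "tutor":
            continue
        examples.append({
            "source": source,               # hypothetical label, e.g. "golden"
            "context": conversation[:index],
            "target": turn["text"],
        })
    return examples


def to_jsonl(examples):
    """Serialise the records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(example) for example in examples)
```

The point of the sketch is that the model is shown what a good tutor reply looks like in context, rather than being told how to behave.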

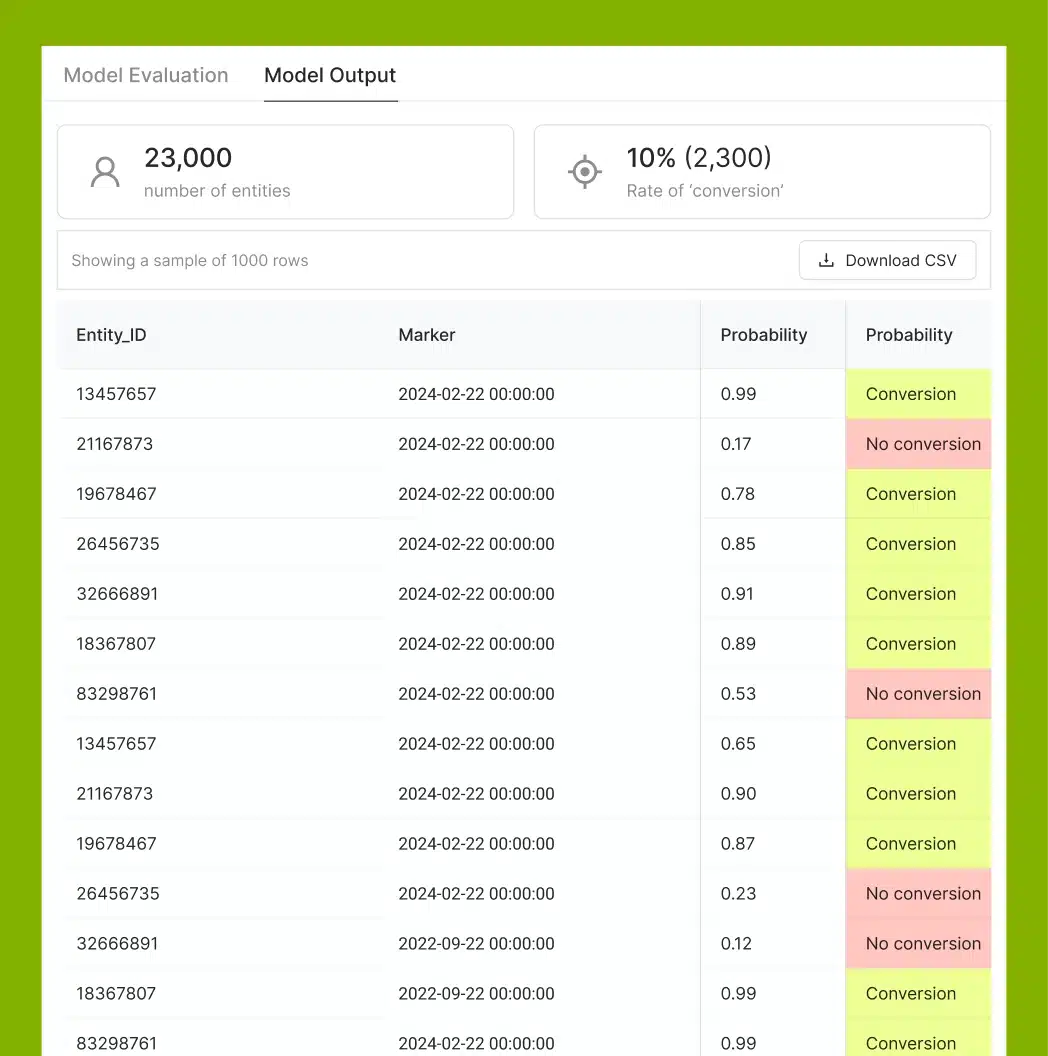

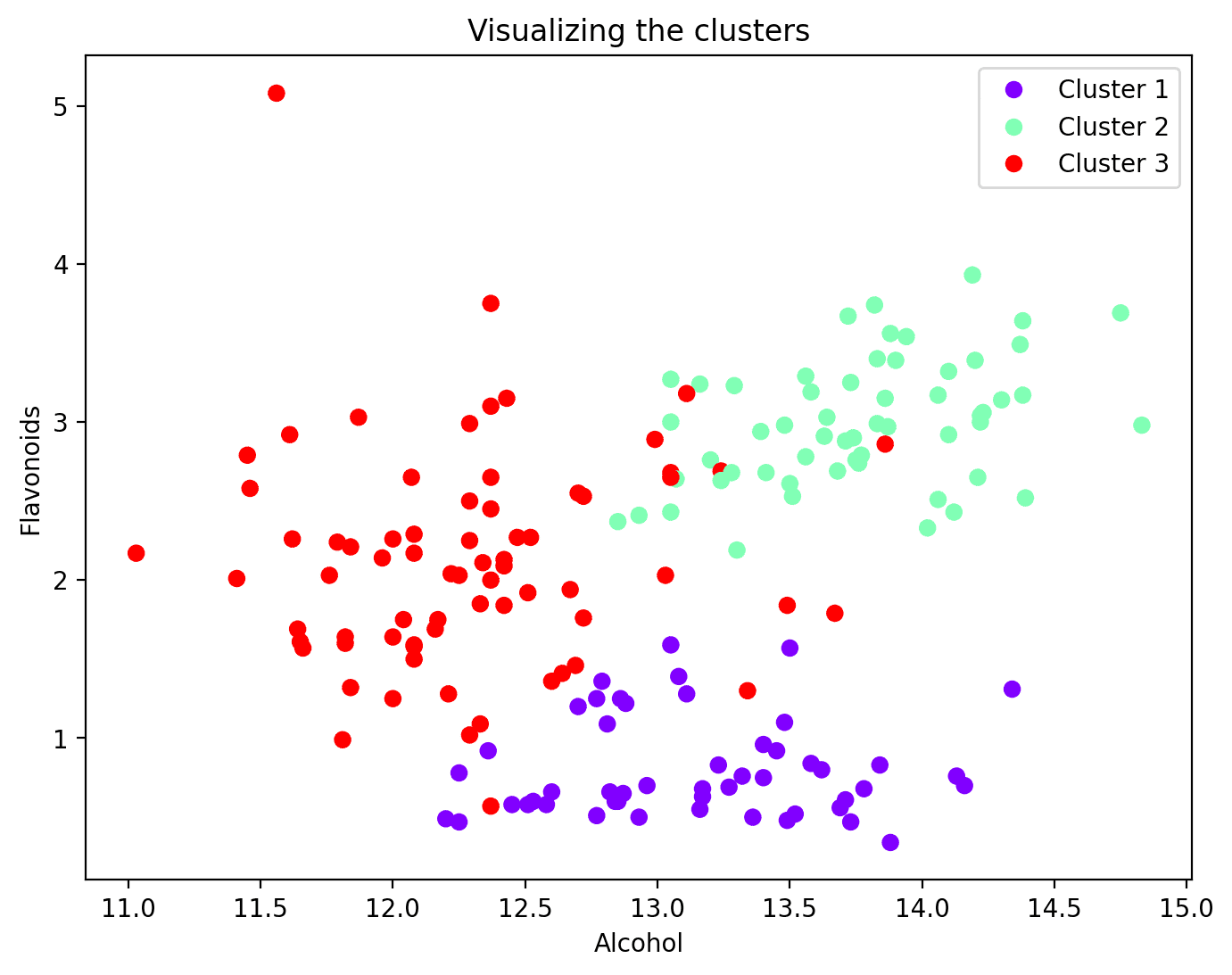

Did it work?

In short, yes, it created a better LLM for tutoring than an out-of-the-box one, based on their evaluation. A large chunk of the paper goes into detail on the evaluation of the model. I’m not going to go into the detail of the results, but I’ll share a few interesting bits.

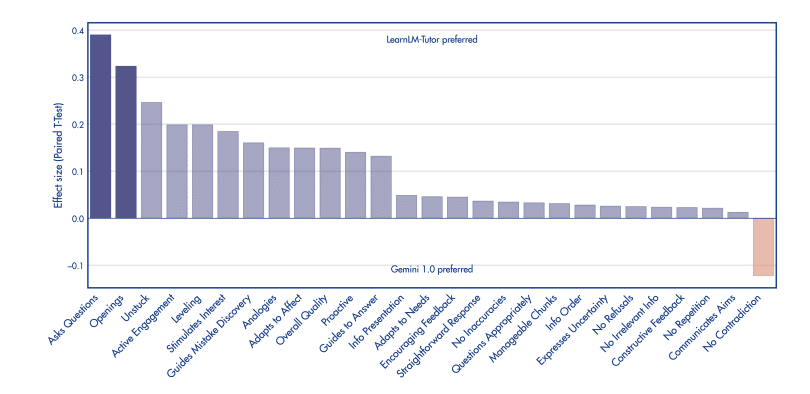

First, they got human experts to evaluate the output from LearnLM-Tutor and standard Gemini. It’s not surprising really that LearnLM-Tutor came out on top, but I thought it interesting to share the things they evaluated:

It’s interesting to note that they then used an LLM to evaluate the quality of the interactions. It’s becoming well known that LLMs are actually quite good at marking their own homework. Try this yourself, by asking your chatbot of choice to do something, and then afterwards asking it to reflect on how good its answer was. Through a process of iteration, they got a process that correlated closely with human evaluation. This means we can potentially create a repeatable framework for evaluating AI tutors.
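One simple way to check whether an LLM-based evaluator ‘correlates closely’ with human judgement is to score the same set of conversations both ways and compute a correlation coefficient. The sketch below uses Pearson correlation as one possible choice; this post does not say which statistic the paper uses, and the scores are placeholder numbers invented purely to exercise the function, not results from the paper.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numeric scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return covariance / sqrt(spread_x * spread_y)


# Placeholder ratings for five conversations (made up for illustration only).
human_scores = [1, 2, 3, 4, 5]
llm_scores = [2, 1, 3, 5, 4]

if __name__ == "__main__":
    print(f"Agreement (Pearson r): {pearson(human_scores, llm_scores):.2f}")
```

A value near 1 would suggest the automated evaluator ranks conversations much as the human experts do, which is what makes a repeatable evaluation framework plausible.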

There’s a lot more in the paper about the detail of the evaluation, and it’s quite readable, so if I’ve piqued your interest it’s now worth heading over and reading the full paper.

So what does this mean?

I know the idea of AI replicating some parts of the function of a tutor isn’t that comfortable, and there are whole rafts of the more human aspects, such as emotional intelligence, that this work doesn’t go near. But also, we know many students value AI for learning. They value its availability, patience, and lack of perceived judgement (‘no such thing as stupid questions with AI’). It’s also almost certain we are going to see many products built on this, and marketed directly to students, either as study aids, or as part of a complete online course.

So, let’s recap where this work points to, if we want to create better AI tutors.

- We aren’t going to create great AI tutors by trying to describe what that behaviour looks like (prompting).

- We might succeed by providing lots and lots of examples of ‘good behaviour’ (fine-tuning).

Fine-tuning an AI tutor is probably outside of the reach of most institutions, so we are going to have to rely on big tech companies to do it for us. That’s potentially a lot of influence. How can we as a sector steer this? Possibilities include:

- Fully engaging with any opportunity to be part of the development and evaluation process?

- Creating and making available high-quality conversational data sets that could be part of the training of these models?

Are these viable, and are they things we actually want to do? Much more debate and discussion is needed on this. I’m sure there will also be plenty of discussion about the sector fine-tuning its own model. Technically, this may just about be feasible, but it’s not just about the model, it’s about all the tools and services that are built on top, and to me this really doesn’t feel like a viable direction: we stopped building our own internal systems a long time ago for good reasons.

In the meantime, the call to action has to be to keep an eye on all the players in this space, not just Microsoft/OpenAI.
