Hyperparameters determine how well your neural network learns and processes information. Model parameters are learned during training. Unlike these parameters, hyperparameters must be set before the training process begins. In this article, we will describe the techniques for optimizing the hyperparameters in the models.

Hyperparameters In Neural Networks

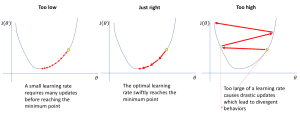

Learning Rate

The learning rate tells the model how much to change based on its errors. If the learning rate is high, the model learns quickly but may overshoot good solutions and train unstably. If the learning rate is low, the model learns slowly but more carefully, which often leads to fewer errors and better accuracy at the cost of longer training.

Source: https://www.jeremyjordan.me/nn-learning-rate/

There are ways of adjusting the learning rate to achieve the best possible results. One is a learning rate schedule, which adjusts the learning rate at predefined intervals during training. Additionally, optimizers like Adam adapt the effective step size automatically as training progresses.
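As a rough illustration of adjusting the learning rate at predefined intervals, here is a minimal step-decay sketch. The function name `step_decay` and the default values (halving every 10 epochs) are assumptions chosen for the example, not settings taken from this article.

```python
def step_decay(initial_lr: float, epoch: int,
               drop_factor: float = 0.5, epochs_per_drop: int = 10) -> float:
    """Return the learning rate for `epoch` under a step-decay schedule.

    The rate is multiplied by `drop_factor` once every `epochs_per_drop` epochs.
    All default values here are illustrative assumptions.
    """
    num_drops = epoch // epochs_per_drop  # how many intervals have fully elapsed
    return initial_lr * (drop_factor ** num_drops)


# Learning rate at a few epochs, starting from 0.1
schedule = {epoch: step_decay(0.1, epoch) for epoch in (0, 9, 10, 25)}
print(schedule)
```

The rate stays constant within each interval and drops only when a new interval starts, which is what distinguishes a schedule like this from an adaptive optimizer.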

Batch Size

Batch size is the number of training samples the model processes at one time. A large batch size means that the model goes through more samples before each parameter update. This can lead to more stable learning but requires more memory. A smaller batch size, on the other hand, updates the model more frequently. In this case, learning can be faster, but there is more variation in each update.

The value of the batch size affects memory and processing time for training.
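To make the trade-off concrete, the sketch below splits a dataset into mini-batches and counts how many parameter updates one pass over the data would trigger. The helper names and the dataset size of 1,000 samples are assumptions made for illustration.

```python
import math


def iterate_batches(samples, batch_size):
    """Yield consecutive slices of `samples`, each with at most `batch_size` items."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


def updates_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of parameter updates in one pass, assuming one update per batch."""
    return math.ceil(num_samples / batch_size)


samples = list(range(1000))  # hypothetical dataset of 1,000 samples
for batch_size in (32, 128):
    batches = list(iterate_batches(samples, batch_size))
    print(batch_size, len(batches), updates_per_epoch(len(samples), batch_size))
```

With the smaller batch size the model is updated four times as often per pass, while each update is computed from fewer samples.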

Number of Epochs

Epochs refers to the number of times a model goes through the entire dataset during training. An epoch consists of several cycles in which all the data batches are shown to the model, it learns from them, and it updates its parameters. More epochs give the model more opportunity to learn, but if not monitored carefully they can result in overfitting. Choosing the right number of epochs is necessary to achieve good accuracy. Techniques like early stopping are commonly used to find this balance.
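Early stopping can be sketched as a simple rule: stop once the validation loss has not improved for a fixed number of epochs. The function below is a minimal illustration under that assumption; the `patience` parameter and the validation losses are made up for the example.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs. If that never happens, the last epoch
    index is returned.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1


# Hypothetical validation losses: improving at first, then getting worse
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch)  # 4
```

In practice one would usually also restore the weights from the best epoch (index 2 here), which this sketch leaves out.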

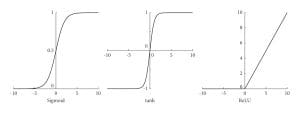

Activation Function

Activation functions decide whether a neuron should be activated or not. This introduces non-linearity into the model. This non-linearity is helpful especially when trying to model complex interactions in the data.

Source: https://www.researchgate.net/publication/354971308/figure/fig1/AS:1080246367457377@1634562212739/Curves-of-the-Sigmoid-Tanh-and-ReLu-activation-functions.jpg

Common activation functions include ReLU, Sigmoid and Tanh. ReLU makes the training of neural networks faster since it passes only the positive activations in neurons. Sigmoid is used for assigning probabilities since it outputs a value between 0 and 1. Tanh outputs a value between -1 and 1, which is advantageous when one wants bounded, zero-centered outputs rather than an unbounded scale. The selection of the right activation function requires careful consideration since it affects whether the network will be able to make good predictions.
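The three functions are short enough to write out directly. This is a plain-Python sketch for single values, intended only to show the output ranges described above; real frameworks apply these element-wise to whole tensors.

```python
import math


def relu(x: float) -> float:
    """Pass positive values through unchanged and map everything else to 0."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x: float) -> float:
    """Squash any real value into the open interval (-1, 1), centered at 0."""
    return math.tanh(x)


for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), round(sigmoid(x), 3), round(tanh(x), 3))
```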

Dropout

Dropout is a technique used to avoid overfitting of the model. It randomly deactivates or “drops out” some neurons by setting their outputs to zero during each training iteration. This process prevents neurons from relying too heavily on specific inputs, features, or other neurons. By discarding the results of specific neurons, dropout helps the network focus on essential features during training. Dropout is applied during training and disabled in the inference phase.
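A minimal sketch of the idea on a list of activations follows. It uses the common "inverted dropout" convention, where surviving activations are scaled up by 1 / (1 - drop_prob) so their expected value is unchanged. That scaling is an assumption of this example and is not described in the text above.

```python
import random


def dropout(activations, drop_prob, training=True, rng=None):
    """Apply inverted dropout to a list of activations.

    During training each value is zeroed with probability `drop_prob`, and
    survivors are divided by the keep probability. At inference time
    (training=False) the activations are returned unchanged.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


activations = [0.5, 1.0, 1.5, 2.0]
print(dropout(activations, drop_prob=0.5, rng=random.Random(0)))  # training
print(dropout(activations, drop_prob=0.5, training=False))        # inference
```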

Hyperparameter Tuning Techniques

Manual Search

This method involves trial and error with values for the hyperparameters that determine how the learning process of a machine learning model is carried out. These settings are adjusted one at a time to observe how each influences the model’s performance. Let’s try changing the settings manually to get better accuracy.

learning_rate = 0.01

batch_size = 64

num_layers = 4

model = Model(learning_rate=learning_rate, batch_size=batch_size, num_layers=num_layers)

model.fit(X_train, y_train)

Manual search is simple because you don’t need any complicated algorithms to set parameters by hand for testing. However, it has several disadvantages compared to other methods. It can take a lot of time, and it may not find the best settings as efficiently as automated methods.

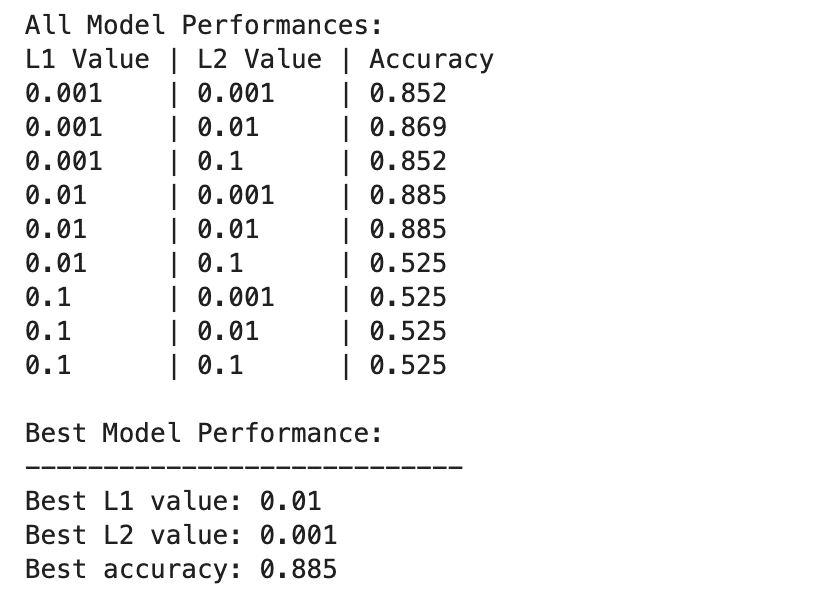

Grid Search

Grid search tests many different combinations of hyperparameters to find the best ones. It trains the model on part of the data. After that, it checks how well it does on another part. Let’s implement grid search using GridSearchCV to find the best model.

from sklearn.model_selection import GridSearchCV

param_grid = {

'learning_rate': [0.001, 0.01, 0.1],

'batch_size': [32, 64, 128],

'num_layers': [2, 4, 8]

}

grid_search = GridSearchCV(model, param_grid, cv=5)

grid_search.fit(X_train, y_train)

Grid search is more systematic than manual search and usually much faster, since the combinations are tried automatically. However, it’s computationally expensive because it takes time to check every possible combination.
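To see where the cost comes from, the grid from the example above can be enumerated by hand. The sketch below uses only the standard library; the variable names are illustrative, and the fold count of 5 matches the `cv=5` argument used earlier.

```python
from itertools import product

param_grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [32, 64, 128],
    "num_layers": [2, 4, 8],
}

# Every combination of one value per hyperparameter
combinations = [dict(zip(param_grid, values))
                for values in product(*param_grid.values())]

cv_folds = 5
num_fits = len(combinations) * cv_folds  # one training run per combination per fold

print(len(combinations), num_fits)  # 27 135
```

Adding a fourth hyperparameter with three candidate values would triple both numbers, which is why grid search scales poorly as the number of hyperparameters grows.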

Random Search

This technique randomly selects combinations of hyperparameters to find the most efficient model. For each random combination, it trains the model and checks how well it performs. In this way, it can quickly arrive at good settings that cause the model to perform better. We can implement random search using RandomizedSearchCV to obtain the best model on the training data.

from sklearn.model_selection import RandomizedSearchCV

from scipy.stats import uniform, randint

param_dist = {

'learning_rate': uniform(0.001, 0.099),  # scipy's uniform(loc, scale) samples from [loc, loc + scale], here [0.001, 0.1]

'batch_size': randint(32, 129),

'num_layers': randint(2, 9)

}

random_search = RandomizedSearchCV(model, param_distributions=param_dist, n_iter=10, cv=5)

random_search.fit(X_train, y_train)

Random search is often more efficient than grid search since only a limited number of hyperparameter combinations are checked to find suitable settings. However, it might not find the best combination of hyperparameters, particularly when the hyperparameter search space is large.
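The sampling loop behind random search can be sketched without any library. In the example below, `toy_score` is a hypothetical stand-in for a cross-validated score and does not train anything; the ranges mirror the RandomizedSearchCV example above, and the seed and helper names are assumptions.

```python
import math
import random


def sample_hyperparameters(rng):
    """Draw one random combination of hyperparameters."""
    return {
        "learning_rate": rng.uniform(0.001, 0.1),
        "batch_size": rng.randint(32, 128),  # inclusive on both ends
        "num_layers": rng.randint(2, 8),     # inclusive on both ends
    }


def toy_score(params):
    """Hypothetical score (higher is better); a real search would train a model."""
    lr_penalty = abs(math.log10(params["learning_rate"]) + 2.0)
    layer_penalty = 0.1 * abs(params["num_layers"] - 4)
    return -(lr_penalty + layer_penalty)


def random_search(n_iter, seed=0):
    """Evaluate `n_iter` random combinations and return the best one found."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_iter):
        params = sample_hyperparameters(rng)
        trials.append((toy_score(params), params))
    best_score, best_params = max(trials, key=lambda trial: trial[0])
    return best_score, best_params, trials


best_score, best_params, trials = random_search(n_iter=10)
print(best_params, round(best_score, 3))
```

Only 10 combinations are evaluated regardless of how large the search space is, which is the source of both the speed and the risk of missing the best region.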

Wrapping Up

We’ve covered some of the basic hyperparameter tuning techniques. Advanced techniques include Bayesian Optimization, Genetic Algorithms and Hyperband.

Jayita Gulati is a machine learning enthusiast and technical writer driven by her passion for building machine learning models. She holds a Master’s degree in Computer Science from the University of Liverpool.