Picture generated with DALL-E 3

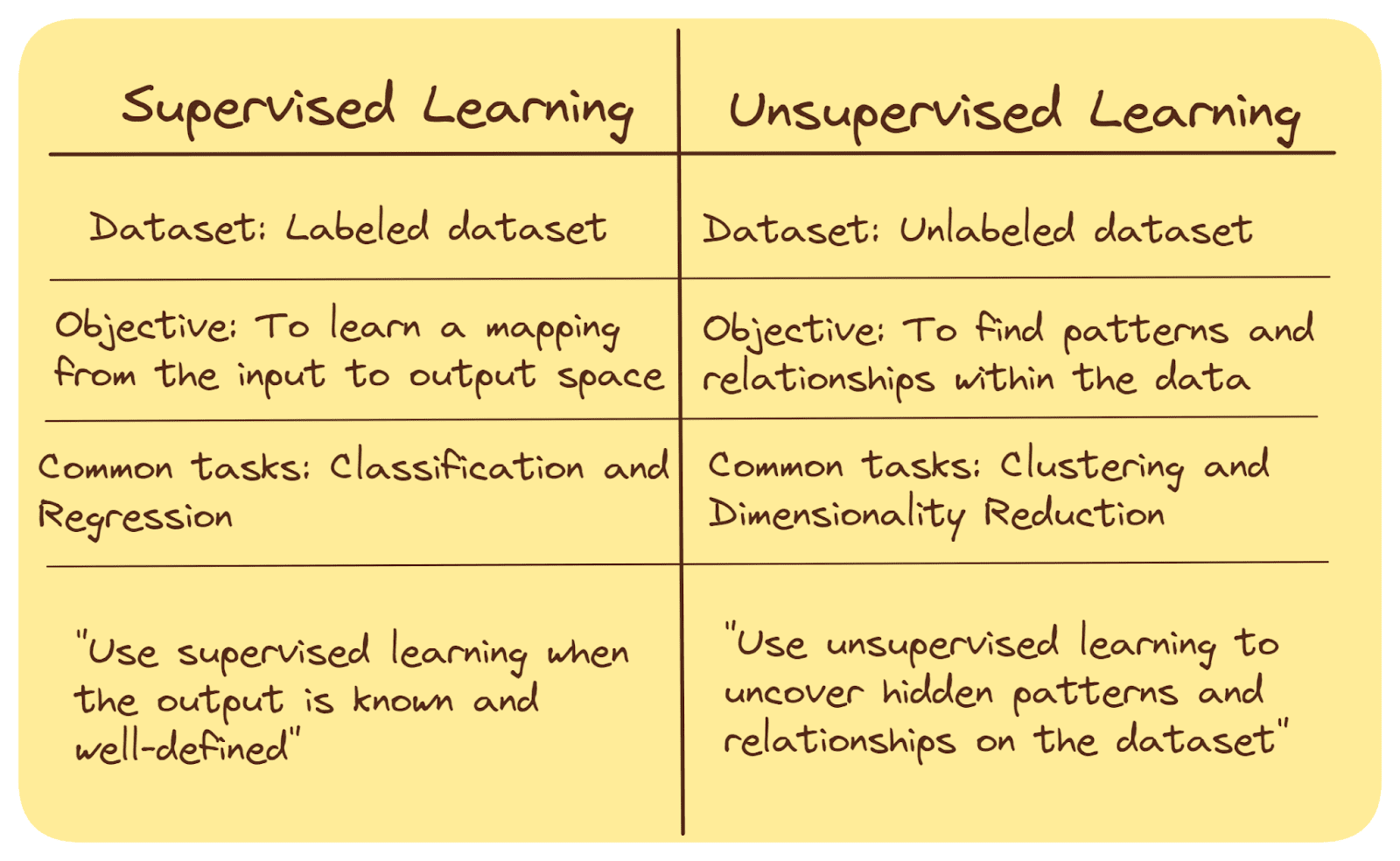

Machine learning is a subset of artificial intelligence that can bring value to a business by providing efficiency and predictive insight. It is a valuable tool for any business.

We know that last year was full of machine learning breakthroughs, and this year is no different. There is simply a lot to learn about.

With so much to learn, I have selected a few papers from 2024 that you should read to improve your knowledge.

What are these papers? Let’s get into it.

HyperFast: Instant Classification for Tabular Data

HyperFast is a meta-trained hypernetwork model developed in research by Bonet et al. (2024). It is designed to produce a classification model that can classify tabular data instantly, in a single forward pass.

The authors state that HyperFast can generate a task-specific neural network for an unseen dataset. That network can be used directly for classification, which eliminates the need to train a model. This approach would significantly reduce the computational demands and time required to deploy machine learning models.

The HyperFast framework shows that the input data is first transformed through standardization and dimensionality reduction. A sequence of hypernetworks then produces the weights for the network's layers, which include a nearest neighbor-based classification bias.
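HyperFast's hypernetworks are themselves meta-trained neural networks, so the framework cannot be reproduced in a few lines. The sketch below only illustrates the underlying idea: a labeled dataset is turned into a ready-to-use classifier in one pass, with no gradient-based training. It follows the same outline. First it standardizes the data. Next it reduces the dimensionality with PCA, computed through an SVD. It then generates the layer weights and finally adds a nearest-neighbor bias. The difference is that the "generated" weights come from a simple closed-form rule based on class means (a prototype classifier), not from a learned hypernetwork. The function name `generate_classifier`, the prototype rule, and the `nn_weight` parameter are assumptions made for illustration, not the paper's method.

```python
import numpy as np

def generate_classifier(X, y, n_components=2):
    """Toy stand-in for a hypernetwork: map a labeled dataset directly to
    classifier weights, with no gradient-based training (illustrative only)."""
    # 1. Standardization: zero mean and unit variance per feature.
    mean, std = X.mean(axis=0), X.std(axis=0) + 1e-8
    Z = (X - mean) / std
    # 2. Dimensionality reduction: project onto the top principal components.
    _, _, vt = np.linalg.svd(Z, full_matrices=False)
    proj = vt[:n_components].T            # (n_features, n_components)
    H = Z @ proj                          # (n_samples, n_components)
    # 3. "Generate" a linear layer from the data: one prototype (mean) per class.
    classes = np.unique(y)
    protos = np.stack([H[y == c].mean(axis=0) for c in classes])
    W = protos.T                          # (n_components, n_classes)
    # With this bias, logits equal -0.5 * ||h - prototype||^2 plus a per-sample constant.
    b = -0.5 * (protos ** 2).sum(axis=1)

    def predict(X_new, nn_weight=1.0):
        H_new = ((X_new - mean) / std) @ proj
        logits = H_new @ W + b
        # 4. Nearest-neighbor bias: boost the class of the closest training point.
        dists = ((H_new[:, None, :] - H[None, :, :]) ** 2).sum(axis=2)
        nn_class = y[dists.argmin(axis=1)]
        logits = logits + nn_weight * (classes[None, :] == nn_class[:, None])
        return classes[logits.argmax(axis=1)]

    return predict

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (20, 4)), rng.normal(4, 1, (20, 4))])
y = np.array([0] * 20 + [1] * 20)
predict = generate_classifier(X, y)       # the "generation" step: no training loop
print(predict(np.array([[0.0, 0, 0, 0], [4.0, 4, 4, 4]])))
```

Because the weights are computed directly from the data, "fitting" here costs one SVD and a few averages. HyperFast replaces this hand-written rule with hypernetworks that are meta-trained across many datasets.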

Overall, the results show that HyperFast performs excellently. It is faster than many classical methods and does not need fine-tuning. The paper concludes that HyperFast could become a new approach that can be applied in many real-life cases.

EasyRL4Rec: A User-Friendly Code Library for Reinforcement Learning Based Recommender Systems

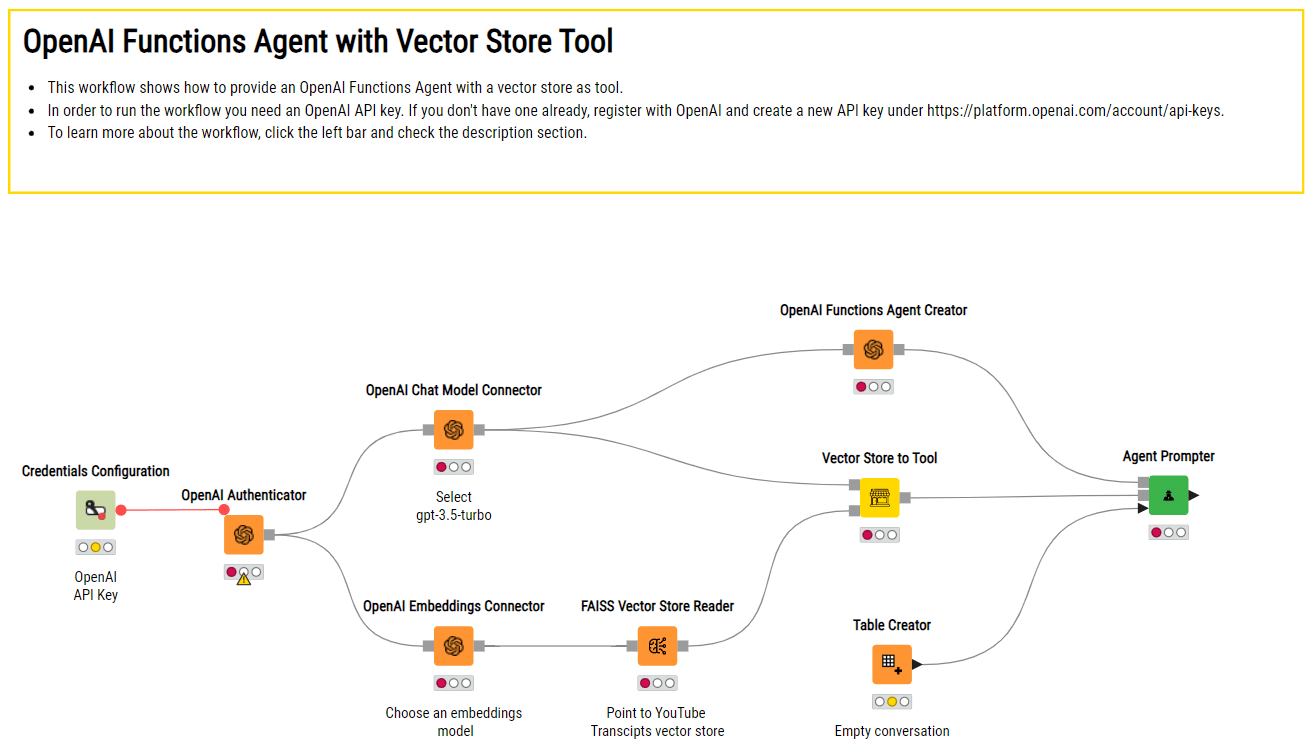

The next paper we will discuss presents a new library proposed by Yu et al. (2024) called EasyRL4Rec. It is a user-friendly code library designed for developing and testing Reinforcement Learning (RL)-based Recommender Systems (RSs).

The library offers a modular structure with four core modules (Environment, Policy, StateTracker, and Collector). Each module addresses a different stage of the Reinforcement Learning process.

The overall structure is built around these core modules of the Reinforcement Learning workflow:

- Environments (Envs) simulate user interactions.
- A Collector gathers data from those interactions.
- A State Tracker creates state representations.
- A Policy module handles decision-making.

The library also includes a data layer for managing datasets and an Executor layer with a Trainer and an Evaluator, which oversee the learning and performance assessment of the RL agent.
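The division of labor among the four core modules can be made concrete with a minimal, library-independent sketch of one interaction loop. The class and method names below mirror the module names from the paper, but they are hypothetical; this is not EasyRL4Rec's actual API. In this toy setup:

- The environment is a simulated user who likes a fixed set of items.
- The state tracker keeps the last few recommended items.
- The policy is epsilon-greedy over per-item reward estimates and ignores the state for simplicity.
- The collector records transitions.

```python
import random
from collections import deque

class Environment:
    """Toy simulated user: reward 1 if the recommended item is one they like."""
    def __init__(self, liked_items, episode_len=10):
        self.liked = set(liked_items)
        self.episode_len = episode_len
        self.t = 0

    def reset(self):
        self.t = 0

    def step(self, item):
        self.t += 1
        reward = 1.0 if item in self.liked else 0.0
        done = self.t >= self.episode_len
        return item, reward, done          # observation is simply the consumed item

class StateTracker:
    """Builds the state from the most recent interactions."""
    def __init__(self, window=3):
        self.history = deque(maxlen=window)

    def reset(self):
        self.history.clear()
        return tuple(self.history)

    def update(self, obs):
        self.history.append(obs)
        return tuple(self.history)

class Policy:
    """Epsilon-greedy over running-average reward per item (ignores the state)."""
    def __init__(self, n_items, epsilon=0.1, seed=0):
        self.values = [0.0] * n_items
        self.counts = [0] * n_items
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self, state):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=self.values.__getitem__)

    def learn(self, transitions):
        for _, item, reward, _ in transitions:
            self.counts[item] += 1
            self.values[item] += (reward - self.values[item]) / self.counts[item]

class Collector:
    """Runs the policy in the environment and gathers (state, action, reward, next_state)."""
    def __init__(self, env, tracker, policy):
        self.env, self.tracker, self.policy = env, tracker, policy

    def collect_episode(self):
        self.env.reset()
        state = self.tracker.reset()
        transitions, done = [], False
        while not done:
            item = self.policy.act(state)
            obs, reward, done = self.env.step(item)
            next_state = self.tracker.update(obs)
            transitions.append((state, item, reward, next_state))
            state = next_state
        return transitions

env = Environment(liked_items=[3, 7])
collector = Collector(env, StateTracker(), Policy(n_items=10))
for episode in range(50):
    batch = collector.collect_episode()
    collector.policy.learn(batch)  # the "trainer" role: update the policy from collected data
```

Keeping each responsibility in its own class is what makes the pieces swappable. For example, a different user simulator or a learned state encoder can be dropped in without touching the collection loop.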

The authors conclude that EasyRL4Rec provides a user-friendly framework that could address practical challenges in RL for recommender systems.

Label Propagation for Zero-shot Classification with Vision-Language Models

The paper by Stojnic et al. (2024) introduces a technique called ZLaP, which stands for Zero-shot classification with Label Propagation. It improves the zero-shot classification of Vision-Language Models by using geodesic distances for classification.

As we know, Vision-Language Models such as GPT-4V or LLaVA are capable of zero-shot learning, meaning they can perform classification without labeled images. However, their zero-shot performance can still be improved, which is why the research team developed the ZLaP technique.

The core idea of ZLaP is to apply label propagation on a graph built from the dataset, comprising both image and text nodes. ZLaP calculates geodesic distances within this graph to perform classification. The method is also designed to handle the dual modalities of text and images.
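Label propagation itself is a classic graph algorithm. The sketch below is a generic version of it, using the standard iterative update F ← αSF + (1 − α)Y. It is not ZLaP's exact formulation. The graph is set up as follows:

- Text nodes (one embedding per class name) act as labeled seeds.
- Image nodes are unlabeled.
- Each node is connected to its k nearest neighbors by cosine similarity.

Class scores then flow along paths of similar images, so an image can inherit a label through a chain of neighbors rather than only from its direct similarity to the text. That is the intuition behind classifying with graph (geodesic) distances. The random embeddings stand in for what a vision-language encoder would produce. The function name and its parameters (`k`, `alpha`, `n_iter`) are assumptions.

```python
import numpy as np

def label_propagation(text_emb, image_emb, k=3, alpha=0.9, n_iter=50):
    """Generic label propagation over a kNN graph of text and image nodes.

    text_emb:  (C, d) one embedding per class (labeled seed nodes)
    image_emb: (N, d) unlabeled image embeddings
    Returns the predicted class index for each of the N images.
    """
    X = np.vstack([text_emb, image_emb])
    X = X / np.linalg.norm(X, axis=1, keepdims=True)   # dot product = cosine similarity
    n, C = len(X), len(text_emb)
    sim = X @ X.T
    np.fill_diagonal(sim, -np.inf)                     # exclude self-loops
    # Keep each node's k most similar neighbors (non-negative weights), then symmetrize.
    nbrs = np.argsort(-sim, axis=1)[:, :k]
    rows = np.repeat(np.arange(n), k)
    W = np.zeros((n, n))
    W[rows, nbrs.ravel()] = np.clip(sim[rows, nbrs.ravel()], 0, None)
    W = np.maximum(W, W.T)
    # Symmetric normalization: S = D^-1/2 W D^-1/2.
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(np.where(d > 0, d, 1.0))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    # Seeds: text node c carries label c; image nodes start with no label mass.
    Y = np.zeros((n, C))
    Y[np.arange(C), np.arange(C)] = 1.0
    F = Y.copy()
    for _ in range(n_iter):
        F = alpha * S @ F + (1 - alpha) * Y            # spread scores, keep pulling toward seeds
    return F[C:].argmax(axis=1)

rng = np.random.default_rng(0)
text = np.eye(2, 8)                                    # two toy class "prompts"
images = np.vstack([text[0] + 0.1 * rng.normal(size=(5, 8)),
                    text[1] + 0.1 * rng.normal(size=(5, 8))])
print(label_propagation(text, images))
```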

Performance-wise, ZLaP consistently outperforms other state-of-the-art zero-shot methods, in both transductive and inductive inference settings, across experiments on 14 different datasets.

Overall, the technique significantly improved classification accuracy across multiple datasets, showing promise for ZLaP with Vision-Language Models.

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

The fourth paper we will discuss is by Munkhdalai et al. (2024). Their paper introduces Infini-attention, a technique for scaling Transformer-based Large Language Models (LLMs) to infinitely long inputs with limited memory and computation.

The Infini-attention mechanism integrates a compressive memory system into the standard attention mechanism. It combines traditional causal (local) attention with a compressive memory that stores and updates historical context. This lets it process extended sequences efficiently by aggregating both long-term and local information within the Transformer network.
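The memory mechanism can be sketched in a few lines. The version below follows the linear memory retrieval and update described in the Infini-attention paper, as we understand it. For each segment:

1. It reads from a fixed-size memory matrix `M` (with normalization vector `z`) using ELU+1 feature maps.
2. It computes ordinary causal softmax attention within the segment.
3. It mixes the two results with a sigmoid gate.
4. It writes the segment's own keys and values into memory.

This is a single-head NumPy sketch under simplifying assumptions: there are no learned projections, the gate parameter `beta` is fixed rather than learned, a small `eps` avoids dividing by zero before the memory is populated, and the paper's delta-rule variant is omitted.

```python
import numpy as np

def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1: equals x + 1 for x > 0 and exp(x) otherwise (always positive)
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def causal_softmax_attention(Q, K, V):
    """Standard scaled dot-product attention with a causal mask, within one segment."""
    L, d = Q.shape
    scores = Q @ K.T / np.sqrt(d)
    mask = np.triu(np.ones((L, L), dtype=bool), k=1)   # block attention to future positions
    scores = np.where(mask, -np.inf, scores)
    scores -= scores.max(axis=1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=1, keepdims=True)
    return w @ V

def infini_attention(segments, beta=0.0, eps=1e-6):
    """Process a long sequence segment by segment with a fixed-size compressive memory.

    segments: list of (Q, K, V) tuples, Q and K of shape (L, d_k), V of shape (L, d_v).
    """
    d_k, d_v = segments[0][0].shape[1], segments[0][2].shape[1]
    M = np.zeros((d_k, d_v))            # memory size does not grow with sequence length
    z = np.zeros(d_k)                   # running sum of sigma(K) used for normalization
    gate = 1.0 / (1.0 + np.exp(-beta))  # sigmoid(beta) mixes memory and local attention
    outputs = []
    for Q, K, V in segments:
        sQ, sK = elu_plus_one(Q), elu_plus_one(K)
        # Retrieve long-term context written by previous segments.
        A_mem = (sQ @ M) / (sQ @ z + eps)[:, None]
        # Local causal attention over the current segment only.
        A_dot = causal_softmax_attention(Q, K, V)
        outputs.append(gate * A_mem + (1.0 - gate) * A_dot)
        # Linear memory update: add this segment's key-value associations.
        M = M + sK.T @ V
        z = z + sK.sum(axis=0)
    return np.concatenate(outputs), M

rng = np.random.default_rng(0)
L, d = 4, 8
segments = [tuple(rng.normal(size=(L, d)) for _ in range(3)) for _ in range(5)]
out, M = infini_attention(segments)
print(out.shape, M.shape)  # (20, 8) (8, 8)
```

`M` stays `d_k × d_v` no matter how many segments are processed. This is what keeps memory bounded as the input grows, while the softmax attention cost is paid only within each segment.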

Overall, the technique performs better on long-context tasks than the models it was compared against. These tasks include long-context language modeling, passkey retrieval from long sequences, and book summarization.

The technique could open up many future research directions, especially for applications that require processing extensive text data.

AutoCodeRover: Autonomous Program Improvement

The last paper we will discuss is by Zhang et al. (2024). Its main focus is a tool called AutoCodeRover, which uses Large Language Models (LLMs) capable of performing sophisticated code searches. AutoCodeRover uses these searches to automatically resolve GitHub issues, mainly bugs and feature requests. It uses LLMs to parse and understand issues from GitHub. As a result, it can navigate and manipulate the code structure more effectively than traditional file-based approaches when solving the issues.

AutoCodeRover works in two main stages: the Context Retrieval stage and the Patch Generation stage. During context retrieval, it analyzes the search results to check whether enough information has been gathered to identify the buggy parts of the code. It then attempts to generate a patch to fix the issue.
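The two stages can be pictured as a loop. In the sketch below, the context-retrieval stage is modeled with a small structure-aware code search built on Python's `ast` module. AutoCodeRover searches over program structure such as classes and methods rather than raw files, and this toy search mimics that idea. In the real tool, an LLM decides when enough context has been gathered and writes the patch. Here that role is played by a hypothetical `ask_llm` stub, and patch generation is left as a placeholder. The function names, the stub, and the example bug are assumptions for illustration, not the tool's actual interface.

```python
import ast

def search_method(source, name):
    """Return the source of every function or method named `name` (AST-based search)."""
    tree = ast.parse(source)
    return [ast.get_source_segment(source, node)
            for node in ast.walk(tree)
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and node.name == name]

def search_class(source, name):
    """Return the source of every class named `name`."""
    tree = ast.parse(source)
    return [ast.get_source_segment(source, node)
            for node in ast.walk(tree)
            if isinstance(node, ast.ClassDef) and node.name == name]

SEARCH_APIS = {"search_method": search_method, "search_class": search_class}

def ask_llm(issue, context):
    """Hypothetical LLM stub: request another search, or return None when context suffices."""
    if not context:
        return ("search_method", "average")
    return None

def resolve_issue(issue, source, max_rounds=5):
    context = []
    # Stage 1: context retrieval, repeated until the agent judges the context sufficient.
    for _ in range(max_rounds):
        request = ask_llm(issue, context)
        if request is None:
            break
        api, arg = request
        context.extend(SEARCH_APIS[api](source, arg))
    # Stage 2: patch generation (placeholder): an LLM would write a diff from `context` here.
    return {"issue": issue, "buggy_locations": context}

code = '''
def average(xs):
    return sum(xs) / len(xs)   # fails on an empty list
'''
result = resolve_issue("average([]) raises ZeroDivisionError", code)
print(result["buggy_locations"][0])
```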

The paper shows that AutoCodeRover improves performance compared to previous methods. For example, it solved 22-23% of the issues in the SWE-bench-lite dataset, resolving 67 issues in an average time of less than 12 minutes each. This is a notable improvement, since resolving such issues can take developers more than two days on average.

Overall, the paper shows promise, as AutoCodeRover can significantly reduce the manual effort required for program maintenance and improvement tasks.

Conclusion

There are many machine learning papers to read in 2024, and here are the papers I recommend:

- HyperFast: Instant Classification for Tabular Data

- EasyRL4Rec: A User-Friendly Code Library for Reinforcement Learning Based Recommender Systems

- Label Propagation for Zero-shot Classification with Vision-Language Models

- Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

- AutoCodeRover: Autonomous Program Improvement

I hope it helps!

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media. Cornellius writes on a variety of AI and machine learning topics.